Fits a custom deep neural network using the multilayer perceptron architecture. dnn() supports the formula syntax and allows the neural network to be customized to a high degree.

Usage

dnn(

formula = NULL,

data = NULL,

hidden = c(50L, 50L),

activation = "selu",

bias = TRUE,

dropout = 0,

loss = c("mse", "mae", "softmax", "cross-entropy", "gaussian", "binomial", "poisson",

"mvp", "nbinom", "multinomial", "clogit"),

validation = 0,

lambda = 0,

alpha = 0.5,

optimizer = c("sgd", "adam", "adadelta", "adagrad", "rmsprop", "rprop"),

lr = 0.01,

batchsize = NULL,

burnin = Inf,

baseloss = NULL,

shuffle = TRUE,

epochs = 100,

bootstrap = NULL,

bootstrap_parallel = FALSE,

plot = TRUE,

verbose = TRUE,

lr_scheduler = NULL,

custom_parameters = NULL,

device = c("cpu", "cuda", "mps"),

early_stopping = FALSE,

tuning = config_tuning(),

hooks = NULL,

X = NULL,

Y = NULL

)

Arguments

- formula

an object of class "formula": a description of the model that should be fitted

- data

matrix or data.frame with features/predictors and response variable

- hidden

hidden units in layers, length of hidden corresponds to number of layers

- activation

activation functions, can be of length one, or a vector of different activation functions for each layer

- bias

whether to use biases in the layers, can be of length one, or a vector (number of hidden layers + 1 (last layer)) of logicals for each layer.

- dropout

dropout rate, probability of a node getting left out during training (see nn_dropout)

- loss

loss function to be minimized during training. Can also be a distribution from the stats package or a custom function, see details

- validation

percentage of data set that should be taken as validation set (chosen randomly)

- lambda

strength of regularization: lambda penalty, \(\lambda * (L1 + L2)\) (see alpha)

- alpha

weighting between L1 and L2 regularization: \((1 - \alpha) * |weights| + \alpha ||weights||^2\) is added for each layer. Must be between 0 and 1

- optimizer

which optimizer is used for training the network; for more adjustments to the optimizer see config_optimizer

- lr

learning rate given to optimizer

- batchsize

number of samples that are used to calculate one optimization step, default is 10% of the training data

- burnin

training is aborted if the training loss is not below the baseline loss after burnin epochs

- baseloss

baseline loss; if NULL, the baseline loss corresponds to an intercept-only model

- shuffle

if TRUE, data in each batch gets reshuffled every epoch

- epochs

epochs the training goes on for

- bootstrap

bootstrap neural network or not, numeric corresponds to number of bootstrap samples

- bootstrap_parallel

parallelize (CPU) bootstrapping

- plot

plot training loss

- verbose

print training and validation loss of epochs

- lr_scheduler

learning rate scheduler created with config_lr_scheduler

- custom_parameters

List of parameters/variables to be optimized. Can be used in a custom loss function. See Vignette for example.

- device

device on which the network should be trained. mps corresponds to M1/M2 GPU devices.

- early_stopping

if set to an integer, training will stop if the loss has increased for the defined number of epochs in a row; the validation loss is used if available.

- tuning

tuning options created with config_tuning

- X

Feature matrix or data.frame, alternative data interface

- Y

Response vector, factor, matrix or data.frame, alternative data interface

Value

an S3 object of class "citodnn" is returned. It is a list containing everything there is to know about the model and its training process.

The list consists of the following attributes:

- net

An object of class "nn_sequential" "nn_module", originates from the torch package and represents the core object of this workflow.

- call

The original function call

- loss

A list which contains relevant information for the target variable and the used loss function

- data

Contains data used for training the model

- weights

List of weights for each training epoch

- use_model_epoch

Integer, which defines which model from which training epoch should be used for prediction. 1 = best model, 2 = last model

- loaded_model_epoch

Integer, shows which model from which epoch is loaded currently into model$net.

- model_properties

A list of properties of the neural network, contains number of input nodes, number of output nodes, size of hidden layers, activation functions, whether bias is included and if dropout layers are included.

- training_properties

A list of all training parameters that were used the last time the model was trained. It consists of the learning rate, information about a learning rate scheduler, information about the optimizer, the number of epochs, whether early stopping was used, whether plot was active, lambda and alpha for L1/L2 regularization, batchsize, shuffle, whether the data set was split into validation and training, which formula was used for training, and at which epoch the training stopped.

- losses

A data.frame containing training and validation losses of each epoch

Activation functions

Supported activation functions: "relu", "leaky_relu", "tanh", "elu", "rrelu", "prelu", "softplus", "celu", "selu", "gelu", "relu6", "sigmoid", "softsign", "hardtanh", "tanhshrink", "softshrink", "hardshrink", "log_sigmoid"

Loss functions / Likelihoods

We support loss functions and likelihoods for different tasks:

| Name | Explanation | Example / Task |
| --- | --- | --- |
| mse | mean squared error | Regression, predicting continuous values |
| mae | mean absolute error | Regression, predicting continuous values |
| softmax | categorical cross entropy | Multi-class, species classification |
| cross-entropy | categorical cross entropy | Multi-class, species classification |
| gaussian | Normal likelihood | Regression, residual error is also estimated (similar to stats::lm()) |
| binomial | Binomial likelihood | Classification/Logistic regression, mortality |
| poisson | Poisson likelihood | Regression, count data, e.g. species abundances |
| nbinom | Negative binomial likelihood | Regression, count data with dispersion parameter |
| mvp | multivariate probit model | joint species distribution model, multi species (presence absence) |
| multinomial | Multinomial likelihood | step selection in animal movement models |
| clogit | conditional binomial | step selection in animal movement models |

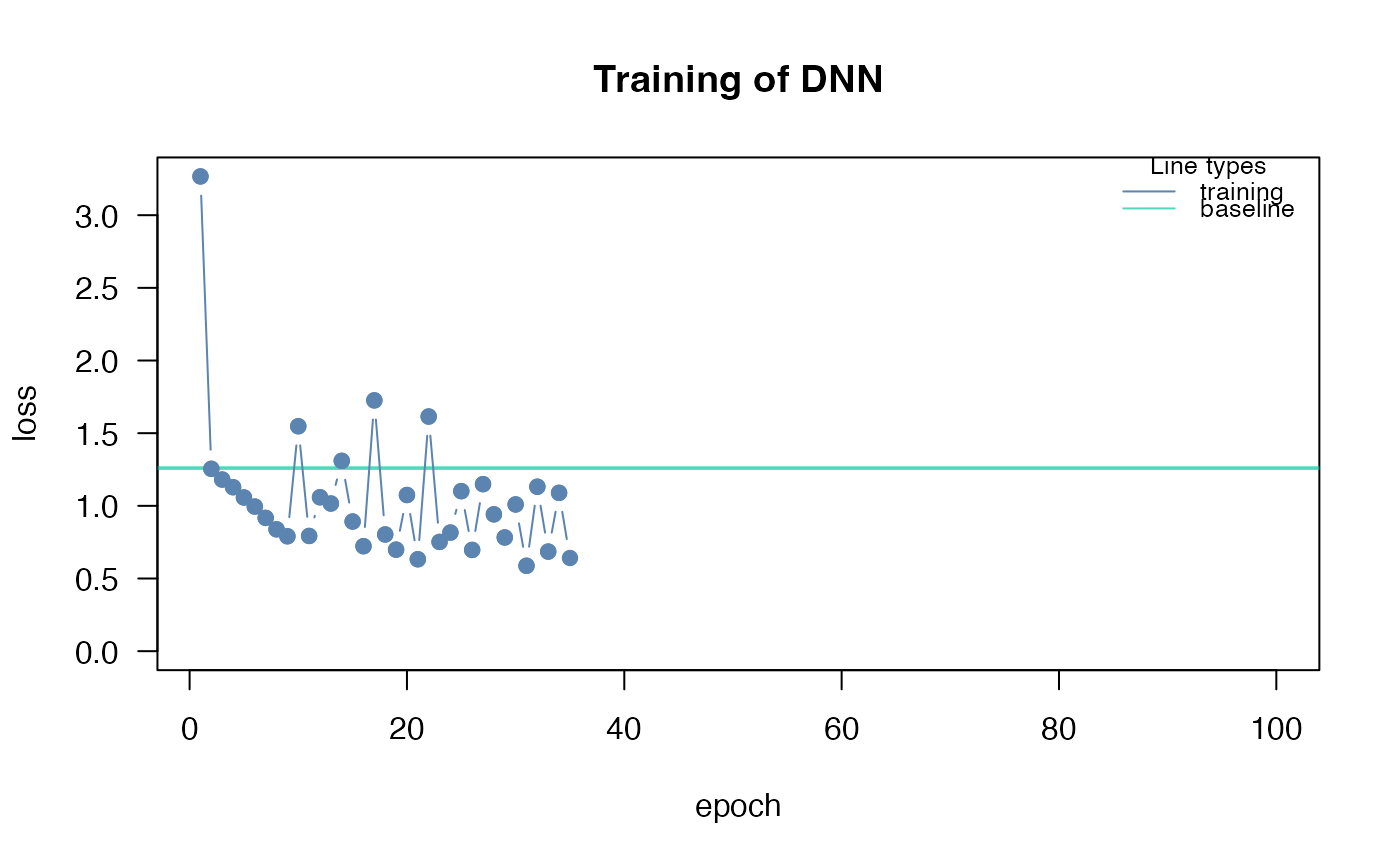

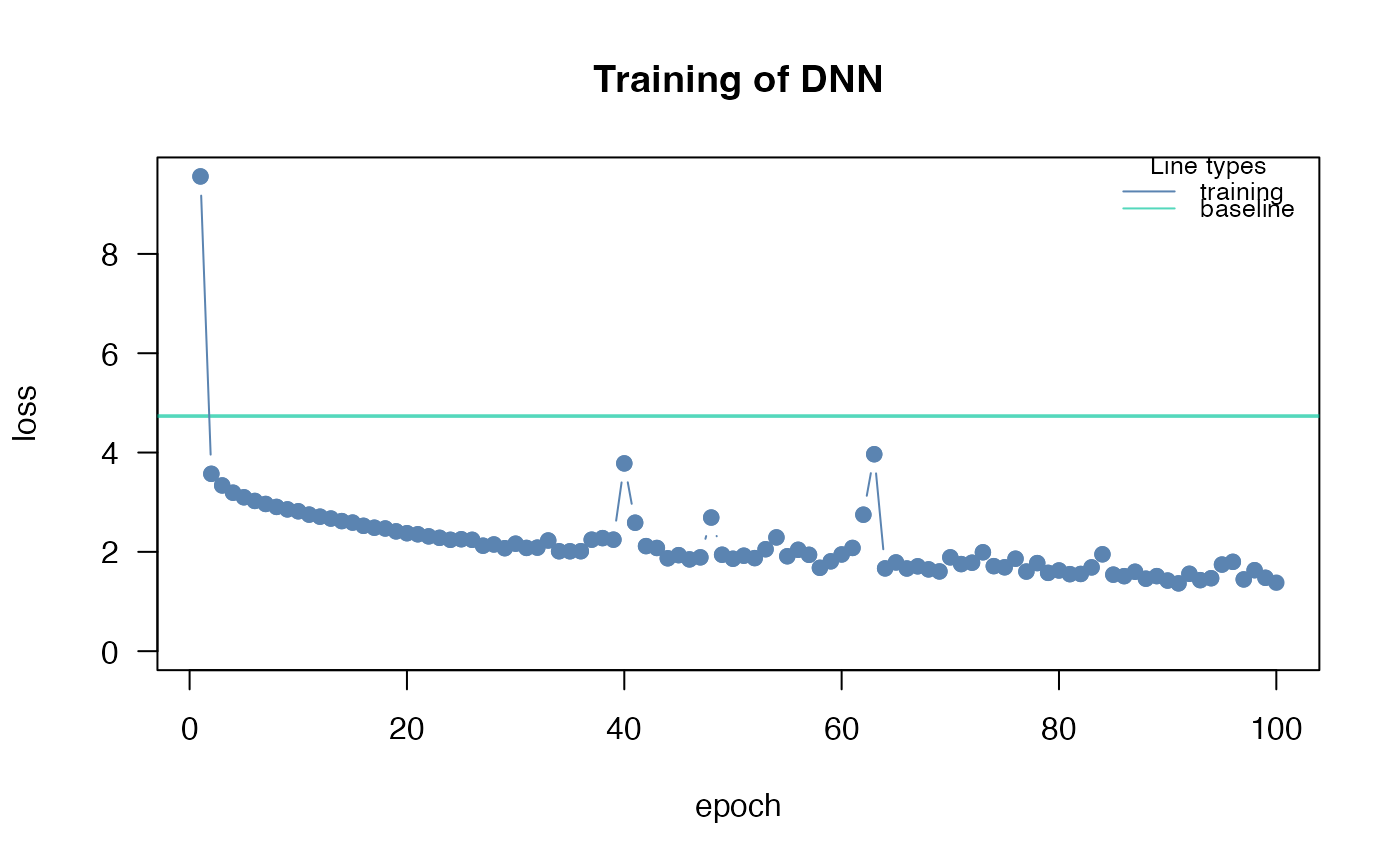

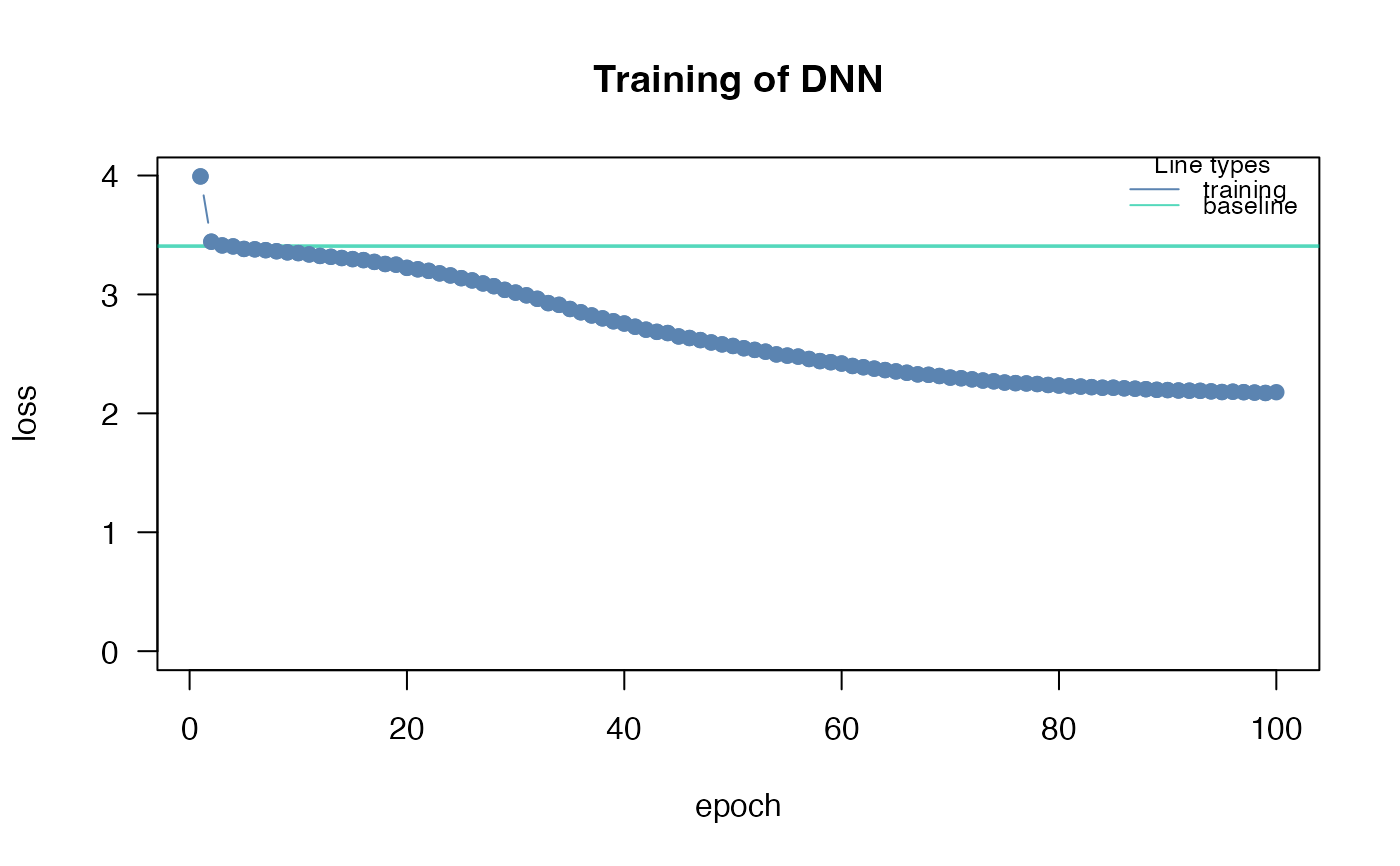

Training and convergence of neural networks

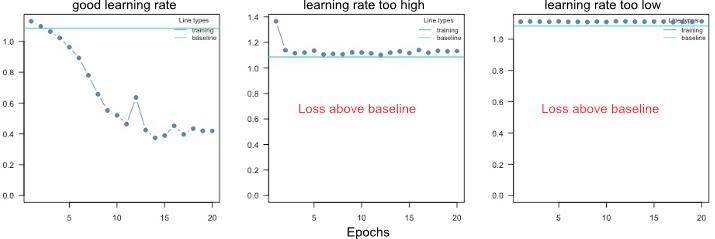

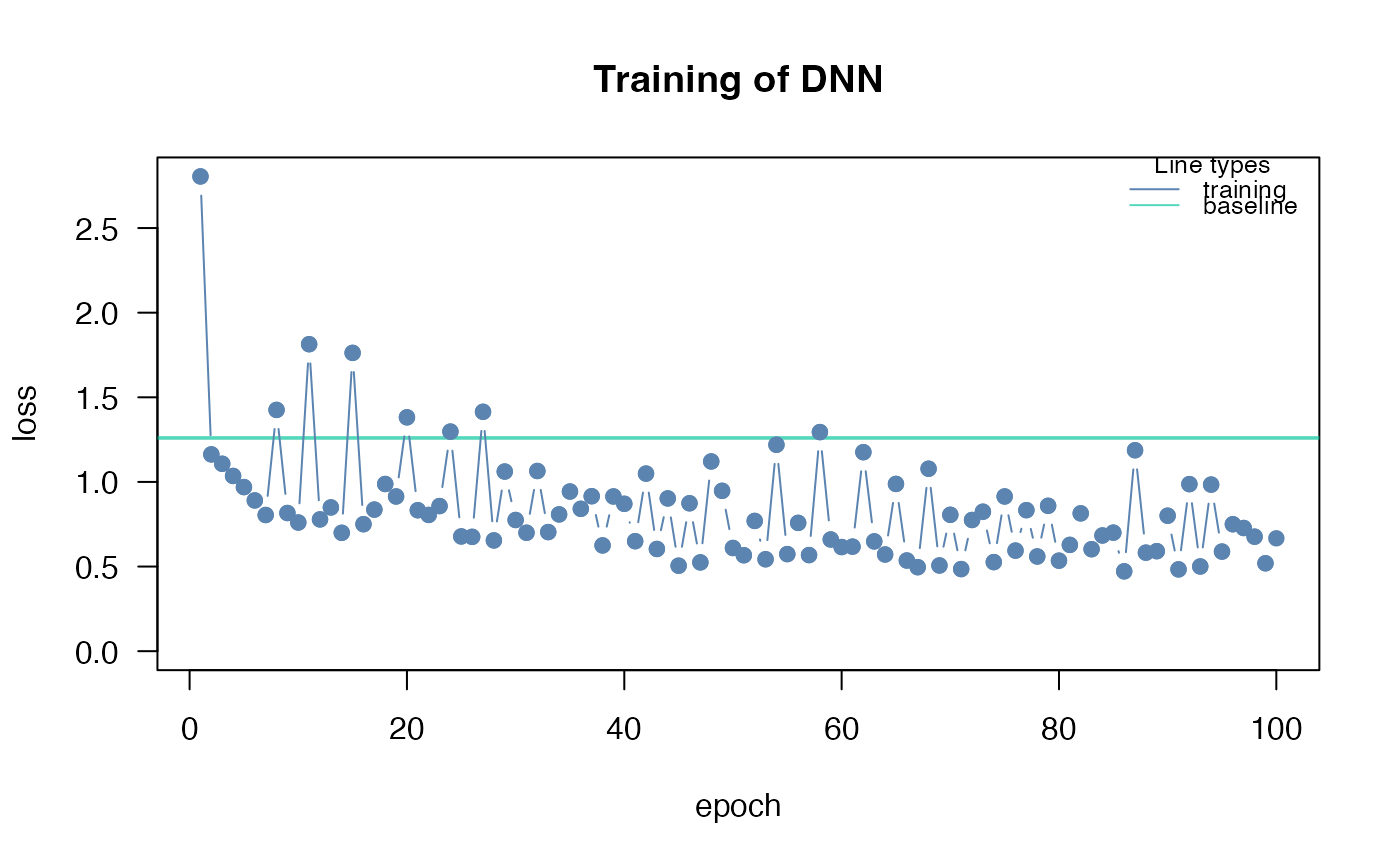

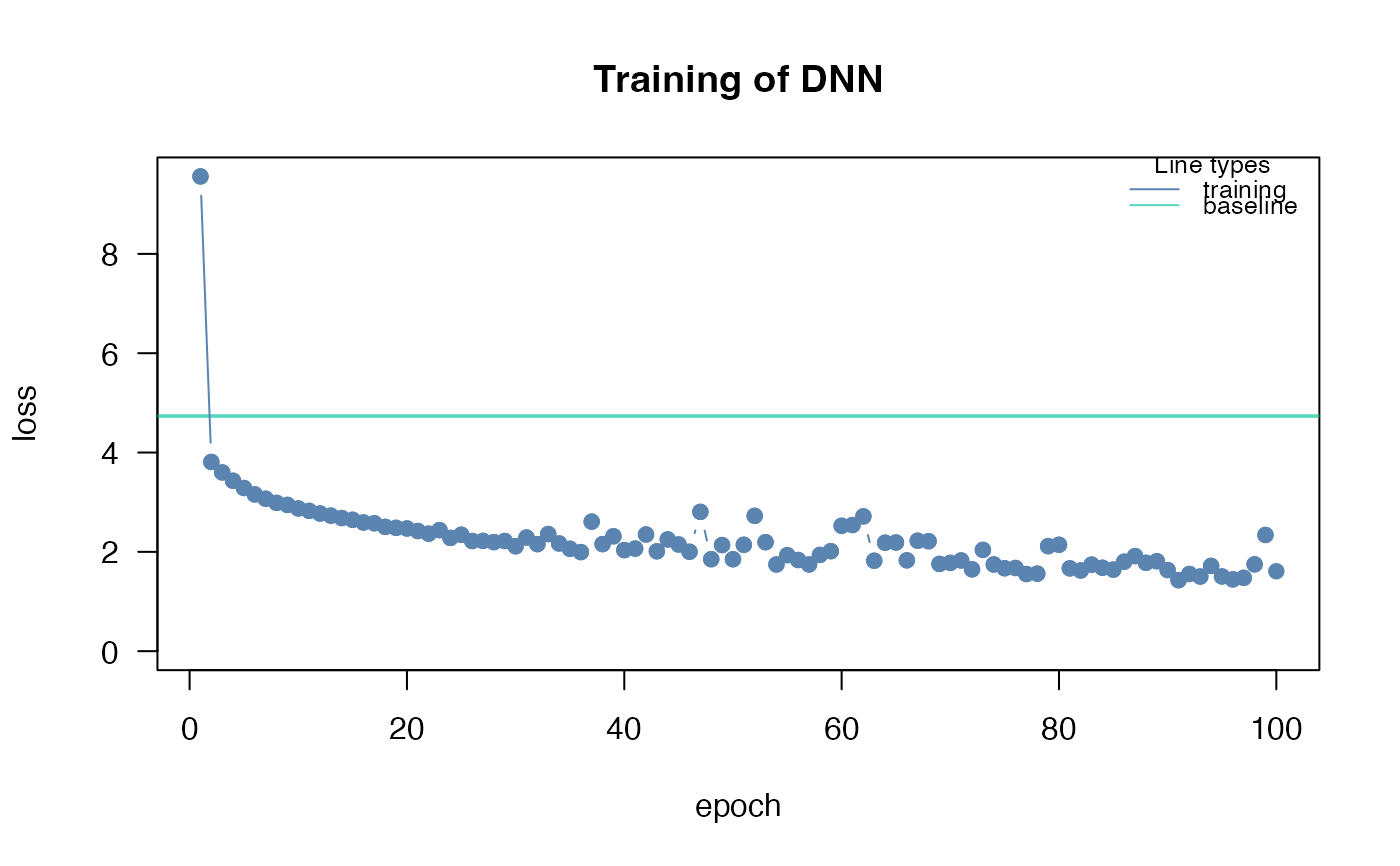

Ensuring convergence can be tricky when training neural networks. Their training is sensitive to a combination of the learning rate (how much the weights are updated in each optimization step), the batch size (a random subset of the data is used in each optimization step), and the number of epochs (number of passes through the training data). Typically, the learning rate should be decreased with the size of the neural network (depth of the network and width of the hidden layers). We provide a baseline loss (intercept-only model) that can give hints about an appropriate learning rate:

If the training loss of the model doesn't fall below the baseline loss, the learning rate is either too high or too low. If this happens, try higher and lower learning rates.

A common strategy is to try (manually) a few different learning rates to see if the learning rate is on the right scale.
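To make the idea of an intercept-only baseline concrete, here is a minimal base R sketch for a squared-error loss. It is only an illustration of the concept, not cito's internal code; the toy data, the variable names, and the `training_loss` value are made up for the example.

```r
# Toy response (illustrative data, not from the package)
y <- c(2.0, 4.0, 6.0, 8.0)

# An intercept-only model predicts the mean of the response for every observation
intercept_prediction <- mean(y)

# Baseline loss under mean squared error
baseline_mse <- mean((y - intercept_prediction)^2)

# A fitted model is only informative if its training loss drops below the baseline
training_loss <- 1.2  # hypothetical loss reported during training
below_baseline <- training_loss < baseline_mse
```

If `below_baseline` were FALSE after many epochs, that would be the signal to try a different learning rate.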

See the troubleshooting vignette (vignette("B-Training_neural_networks")) for more help on training and debugging neural networks.

Finding the right architecture

As with the learning rate, there is no definitive guide to choosing the right architecture for the right task. However, there are some general rules/recommendations: In general, wider and deeper neural networks can improve generalization - but this is a double-edged sword because it also increases the risk of overfitting. So, if you increase the width and depth of the network, you should also add regularization (e.g., by increasing the lambda parameter, which corresponds to the regularization strength). Furthermore, in Pichler & Hartig, 2023, we investigated the effects of the hyperparameters on the prediction performance as a function of the data size. For example, we found that the selu activation function outperforms relu for small data sizes (<100 observations).

We recommend starting with moderate sizes (like the defaults), and if the model doesn't generalize/converge, try larger networks along with a regularization that helps minimize the risk of overfitting (see vignette("B-Training_neural_networks") ).

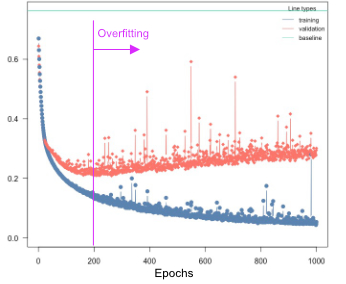

Overfitting

Overfitting means that the model fits the training data well, but generalizes poorly to new observations. We can use the validation argument to detect overfitting. If the validation loss starts to increase again at a certain point, it often means that the model is starting to overfit your training data:

Solutions:

- Re-train with epochs = point where model started to overfit

- Early stopping, stop training when model starts to overfit, can be specified using the early_stopping=… argument

- Use regularization (dropout or elastic-net, see next section)
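The early stopping rule described for the early_stopping argument (stop once the loss has increased for a given number of epochs in a row) can be sketched in base R as follows. The helper `should_stop` and its `patience` argument are hypothetical names used for illustration only; cito's actual implementation may differ in its details.

```r
# Hypothetical helper: TRUE if the loss increased in each of the last
# `patience` epochs, i.e. `patience` consecutive increases
should_stop <- function(losses, patience) {
  if (length(losses) <= patience) return(FALSE)
  recent <- tail(losses, patience + 1)
  all(diff(recent) > 0)
}

# Toy validation losses: decreasing at first, then increasing
val_loss <- c(0.90, 0.70, 0.60, 0.62, 0.65, 0.69)

stop_now <- should_stop(val_loss, patience = 3)         # three increases in a row
stop_earlier <- should_stop(val_loss[1:5], patience = 3) # only two increases so far
```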

Regularization

Elastic Net regularization combines the strengths of L1 (Lasso) and L2 (Ridge) regularization. It introduces a penalty term that encourages sparse weight values while maintaining overall weight shrinkage. By controlling the sparsity of the learned model, Elastic Net regularization helps avoid overfitting while allowing for meaningful feature selection. We advise using elastic net (e.g. lambda = 0.001 and alpha = 0.2).
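As a small worked example, the penalty given in the lambda and alpha argument descriptions can be computed by hand in base R. The helper name `elastic_net_penalty` and the toy weights are assumptions for illustration; the sketch applies the documented formula to a single weight vector and is not cito's internal code.

```r
# Hypothetical helper implementing the documented penalty:
# lambda * ((1 - alpha) * |weights| + alpha * ||weights||^2)
elastic_net_penalty <- function(weights, lambda, alpha) {
  stopifnot(alpha >= 0, alpha <= 1)
  l1 <- sum(abs(weights))  # L1 (lasso) part
  l2 <- sum(weights^2)     # L2 (ridge) part
  lambda * ((1 - alpha) * l1 + alpha * l2)
}

w <- c(0.5, -1.0, 2.0)  # toy weights of one layer
penalty <- elastic_net_penalty(w, lambda = 0.001, alpha = 0.2)
```

With alpha = 0 the penalty reduces to a pure L1 term, and with alpha = 1 to a pure L2 term.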

Dropout regularization helps prevent overfitting by randomly disabling a portion of neurons during training. This technique encourages the network to learn more robust and generalized representations, as it prevents individual neurons from relying too heavily on specific input patterns. Dropout has been widely adopted as a simple yet effective regularization method in deep learning.
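The following base R sketch shows what randomly disabling neurons looks like during a single training step. It assumes the common "inverted dropout" convention, in which the surviving activations are rescaled by 1 / (1 - rate); the function name `dropout_forward` is hypothetical and the code is an illustration, not the torch implementation.

```r
set.seed(1)

# Hypothetical helper: zero out each activation with probability `rate`
# and rescale the survivors so the expected value stays unchanged
dropout_forward <- function(x, rate) {
  stopifnot(rate >= 0, rate < 1)
  keep <- rbinom(length(x), size = 1, prob = 1 - rate)
  x * keep / (1 - rate)
}

activations <- c(0.2, 1.5, -0.7, 0.9)
dropped <- dropout_forward(activations, rate = 0.5)
```

Each element of `dropped` is either zero or twice the original activation; at prediction time no units are dropped.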

By utilizing these regularization methods in your neural network training with the cito package, you can improve generalization performance and enhance the network's ability to handle unseen data. These techniques act as valuable tools in mitigating overfitting and promoting more robust and reliable model performance.

Uncertainty

We can use bootstrapping to generate uncertainties for all outputs. Bootstrapping can be enabled by setting bootstrap = ... to the number of bootstrap samples to be used. Note, however, that the computational cost can be excessive.

In some cases it may be worthwhile to parallelize bootstrapping, for example if you have a GPU and the neural network is small. Parallelization for bootstrapping can be enabled by setting the bootstrap_parallel = ... argument to the desired number of calls to run in parallel.
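The bootstrapping idea itself can be sketched in base R. In this illustration a linear model stands in for the neural network so that the example runs quickly and without torch; the simulated data and all variable names are assumptions made for the example.

```r
set.seed(42)
n <- 50
x <- runif(n)
y <- 1 + 2 * x + rnorm(n, sd = 0.3)
dat <- data.frame(x = x, y = y)
new_x <- data.frame(x = c(0.25, 0.75))

n_boot <- 20
boot_preds <- sapply(seq_len(n_boot), function(i) {
  idx <- sample.int(n, replace = TRUE)  # resample rows with replacement
  fit <- lm(y ~ x, data = dat[idx, ])   # stand-in for refitting the network
  predict(fit, newdata = new_x)
})

# One row per new observation, one column per bootstrap fit
pred_mean <- rowMeans(boot_preds)
pred_se <- apply(boot_preds, 1, sd)
```

Because every bootstrap sample requires a full refit, the cost grows linearly with the number of samples, which is why the computational cost of bootstrapping a neural network can be high.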

Custom Optimizer and Learning Rate Schedulers

When training a network, you have the flexibility to customize the optimizer settings and learning rate scheduler to optimize the learning process. In the cito package, you can initialize these configurations using the config_lr_scheduler and config_optimizer functions.

config_lr_scheduler allows you to define a specific learning rate scheduler that controls how the learning rate changes over time during training. This is beneficial in scenarios where you want to adaptively adjust the learning rate to improve convergence or avoid getting stuck in local optima.

Similarly, the config_optimizer function enables you to specify the optimizer for your network. Different optimizers, such as stochastic gradient descent (SGD), Adam, or RMSprop, offer various strategies for updating the network's weights and biases during training. Choosing the right optimizer can significantly impact the training process and the final performance of your neural network.
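To illustrate what a learning rate scheduler does, here is a minimal base R sketch of a step-decay schedule. It does not use or reproduce the config_lr_scheduler interface; the function `step_decay` and its arguments `lr0`, `step_size`, and `gamma` are hypothetical names chosen for the example.

```r
# Hypothetical step-decay schedule: multiply the learning rate by `gamma`
# after every `step_size` epochs
step_decay <- function(epoch, lr0, step_size, gamma) {
  lr0 * gamma^((epoch - 1) %/% step_size)
}

epochs <- 1:9
lrs <- step_decay(epochs, lr0 = 0.01, step_size = 3, gamma = 0.5)
# Epochs 1-3 use 0.01, epochs 4-6 use 0.005, epochs 7-9 use 0.0025
```

Starting with a larger learning rate and shrinking it over time is one way to combine fast initial progress with stable convergence later in training.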

Hyperparameter tuning

We have implemented experimental support for hyperparameter tuning. Hyperparameters that should be tuned by cito can be marked by setting their values to tune(), for example dnn(..., lr = tune()). tune() is a function that creates a range of random values for the given hyperparameter. You can change the minimum and maximum of the range or pass custom values via tune(values = c(....)). The following table lists the hyperparameters that can currently be tuned:

| Hyperparameter | Example | Details |
| --- | --- | --- |
| hidden | dnn(…, hidden = tune(10, 20, fixed = 'depth')) | Depth and width can both be tuned or only one of them. If both should be tuned, vectors for the lower and upper boundaries must be provided (first = number of nodes) |
| bias | dnn(…, bias = tune()) | Should the bias be turned on or off for all hidden layers |
| lambda | dnn(…, lambda = tune(0.0001, 0.1)) | lambda will be tuned within the range (0.0001, 0.1) |
| alpha | dnn(…, alpha = tune(0.2, 0.4)) | alpha will be tuned within the range (0.2, 0.4) |
| activation | dnn(…, activation = tune()) | activation functions of the hidden layers will be tuned |
| dropout | dnn(…, dropout = tune()) | Dropout rate will be tuned (globally for all layers) |
| lr | dnn(…, lr = tune()) | Learning rate will be tuned |
| batchsize | dnn(…, batchsize = tune()) | batch size will be tuned |
| epochs | dnn(…, epochs = tune()) | number of epochs will be tuned |

The hyperparameters are tuned by random search (i.e., random values for the hyperparameters within a specified range) and by cross-validation. The exact tuning regime can be specified with config_tuning.
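The combination of random search and cross-validation can be sketched in base R. To keep the example fast and independent of torch, a closed-form ridge regression stands in for the neural network and its penalty `lambda` is the tuned hyperparameter; the helpers `ridge_fit` and `cv_mse` and the simulated data are assumptions for illustration and do not reflect how config_tuning is implemented.

```r
set.seed(123)
n <- 60
X <- cbind(1, matrix(rnorm(n * 3), n, 3))
y <- as.numeric(X %*% c(1, 2, 0, -1) + rnorm(n, sd = 0.5))

# Ridge fit as a cheap stand-in for training a network with penalty `lambda`
ridge_fit <- function(X, y, lambda) {
  solve(crossprod(X) + lambda * diag(ncol(X)), crossprod(X, y))
}

# k-fold cross-validated mean squared error for one hyperparameter value
cv_mse <- function(lambda, k = 5) {
  folds <- rep(seq_len(k), length.out = n)
  mean(sapply(seq_len(k), function(f) {
    test <- folds == f
    beta <- ridge_fit(X[!test, , drop = FALSE], y[!test], lambda)
    mean((y[test] - X[test, , drop = FALSE] %*% beta)^2)
  }))
}

# Random search: draw candidate values within a range, evaluate each by CV
candidates <- runif(10, min = 0.0001, max = 0.1)
scores <- sapply(candidates, cv_mse)
best_lambda <- candidates[which.min(scores)]
```

Each candidate is evaluated independently of the others, which is what makes it possible to parallelize the evaluations.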

Note that hyperparameter tuning can be expensive. We have implemented an option to parallelize hyperparameter tuning, including parallelization over one or more GPUs (the hyperparameter evaluation is parallelized, not the CV). This can be especially useful for small models. For example, if you have 4 GPUs, 20 CPU cores, and 20 steps (random samples from the random search), you could run dnn(..., device = "cuda", lr = tune(), batchsize = tune(), tuning = config_tuning(parallel = 20, NGPU = 4)), which will distribute 20 model fits across 4 GPUs, so that each GPU will process 5 models (in parallel).

As this is an experimental feature, we welcome feature requests and bug reports on our GitHub site.

For the custom values, all hyperparameters except for the hidden layers require a vector of values. Hidden layers expect a two-column matrix where the first column is the number of hidden nodes and the second column corresponds to the number of hidden layers.

How neural networks work

In Multilayer Perceptron (MLP) networks, each neuron is connected to every neuron in the previous layer and every neuron in the subsequent layer. The value of each neuron is computed using a weighted sum of the outputs from the previous layer, followed by the application of an activation function. Specifically, the value of a neuron is calculated as the weighted sum of the outputs of the neurons in the previous layer, combined with a bias term. This sum is then passed through an activation function, which introduces non-linearity into the network. The calculated value of each neuron becomes the input for the neurons in the next layer, and the process continues until the output layer is reached. The choice of activation function and the specific weight values determine the network's ability to learn and approximate complex relationships between inputs and outputs.

Therefore, the value of each neuron can be calculated as \( a (\sum_j{ w_j * a_j} + b)\), where \(w_j\) is the weight of the connection from neuron j to the current one, \(a_j\) is the value of neuron j in the previous layer, and \(b\) is the bias term. a() is the activation function, e.g. \( relu(x) = max(0,x)\).
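The computation described above (a weighted sum of the previous layer's values plus a bias term, passed through an activation function) can be traced by hand in base R. The weights, biases, and the helper `dense_layer` are made up for this illustration; real networks learn these values during training.

```r
relu <- function(x) pmax(0, x)

# One dense layer: weighted sum of the inputs plus a bias, then the activation
dense_layer <- function(a_prev, W, b, activation) {
  activation(as.numeric(W %*% a_prev) + b)
}

input <- c(1.0, 2.0)

# Hidden layer with two neurons (one row of W1 per neuron)
W1 <- matrix(c(0.5, -1.0,
               1.0,  0.5), nrow = 2, byrow = TRUE)
b1 <- c(0.0, -1.0)
hidden <- dense_layer(input, W1, b1, relu)

# Output layer with one neuron and no non-linearity
W2 <- matrix(c(1.0, 2.0), nrow = 1)
b2 <- 0.5
output <- dense_layer(hidden, W2, b2, identity)
```

Here the first hidden neuron receives a negative weighted sum (-1.5) and is set to zero by relu, while the second one passes its value of 1 on to the output neuron, which yields 2.5.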

Training on graphics cards

If you have an NVIDIA CUDA-enabled device and have installed the CUDA toolkit version 11.3 and cuDNN 8.4, you can take advantage of GPU acceleration for training your neural networks. It is crucial to have these specific versions installed, as other versions may not be compatible. For detailed installation instructions and more information on utilizing GPUs for training, please refer to the mlverse: 'torch' documentation.

Note: GPU training is optional, and the package can still be used for training on CPU even without CUDA and cuDNN installations.

Examples

# \donttest{

if(torch::torch_is_installed()){

library(cito)

# Example workflow in cito

## Build and train Network

### softmax is used for multi-class responses (e.g., Species)

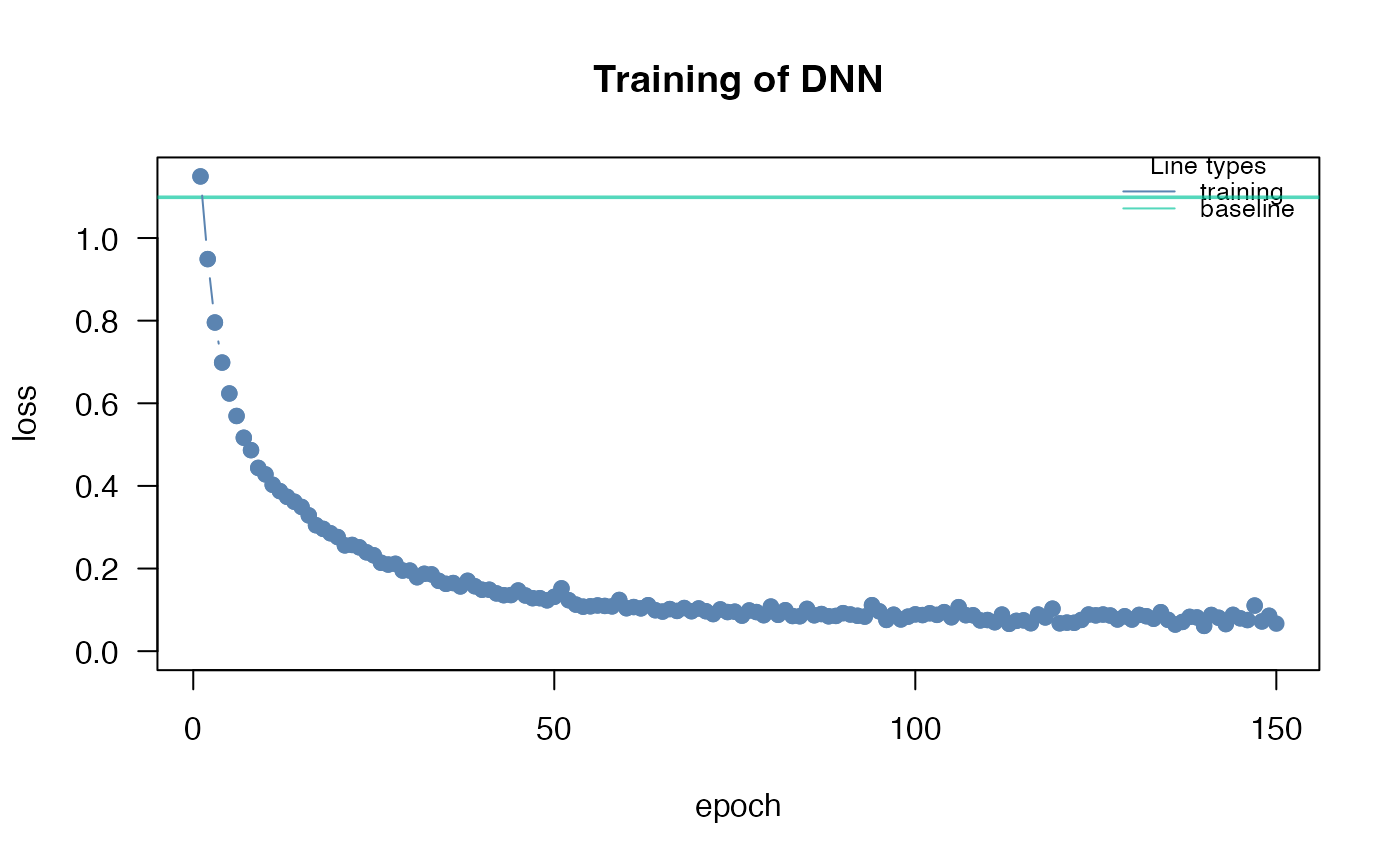

nn.fit<- dnn(Species~., data = datasets::iris, loss = "softmax")

## The training loss is below the baseline loss but at the end of the

## training the loss was still decreasing, so continue training for another 50

## epochs

nn.fit <- continue_training(nn.fit, epochs = 50L)

# Structure of Neural Network

print(nn.fit)

# Plot Neural Network

plot(nn.fit)

## 4 Input nodes (first layer) because of 4 features

## 3 Output nodes (last layer) because of 3 response species (one node for each

## level in the response variable).

## The layers between the input and output layer are called hidden layers (two

## of them)

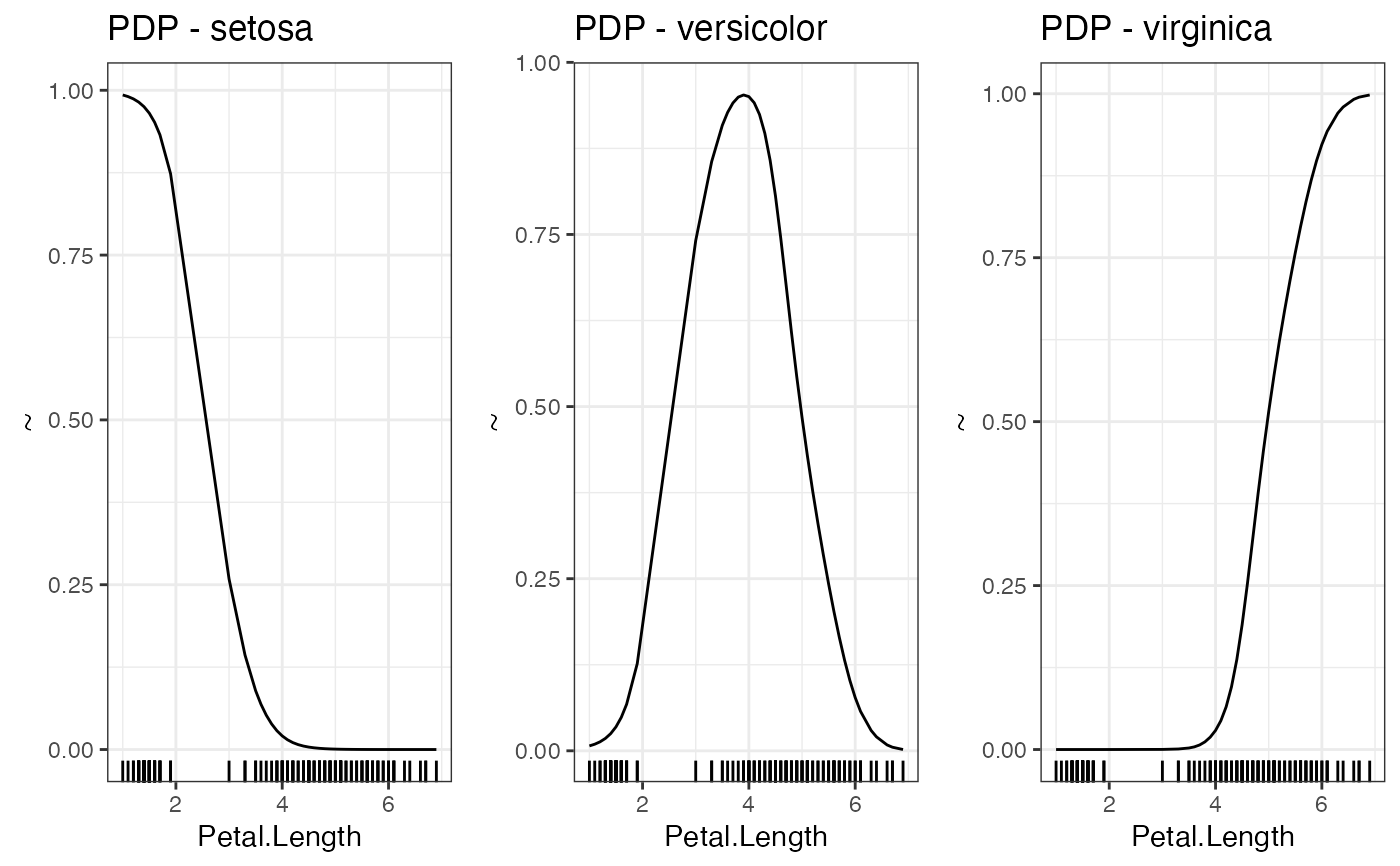

## We now want to understand how the predictions are made, what are the

## important features? The summary function automatically calculates feature

## importance (the interpretation is similar to an anova) and calculates

## average conditional effects that are similar to linear effects:

summary(nn.fit)

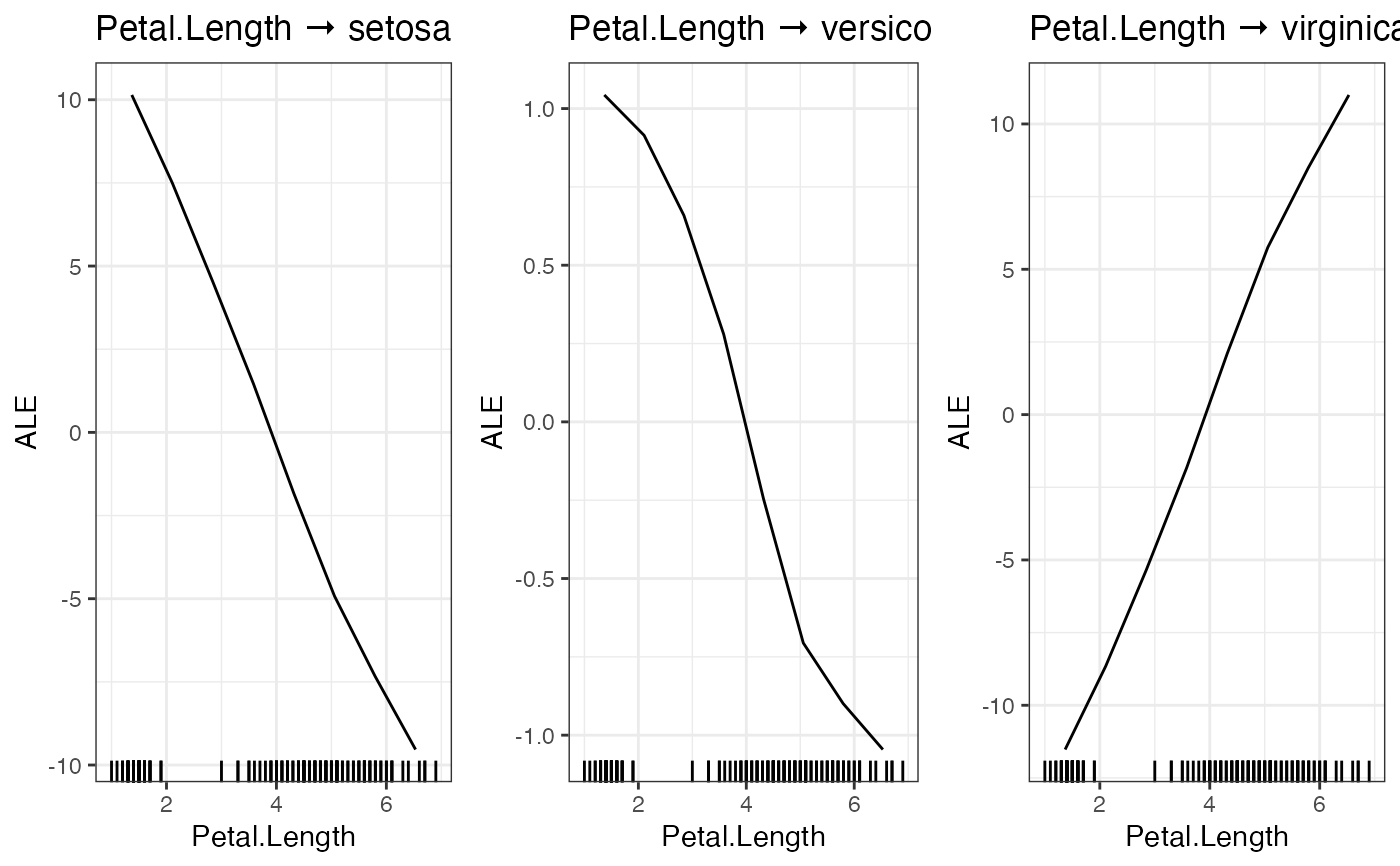

## To visualize the effect (response-feature effect), we can use the ALE and

## PDP functions

# Partial dependencies

PDP(nn.fit, variable = "Petal.Length")

# Accumulated local effect plots

ALE(nn.fit, variable = "Petal.Length")

# Per se, it is difficult to get confidence intervals for our xAI metrics (or

# for the predictions). But we can use bootstrapping to obtain uncertainties

# for all cito outputs:

## Re-fit the neural network with bootstrapping

nn.fit<- dnn(Species~.,

data = datasets::iris,

loss = "softmax",

epochs = 150L,

verbose = FALSE,

bootstrap = 20L)

## convergence can be tested via the analyze_training function

analyze_training(nn.fit)

## Summary for xAI metrics (can take some time):

summary(nn.fit)

## Now with standard errors and p-values

## Note: Take the p-values with a grain of salt! We do not know yet if they are

## correct (e.g. if you use regularization, they are likely conservative == too

## large)

## Predictions with bootstrapping:

dim(predict(nn.fit))

## predictions are by default averaged (over the bootstrap samples)

## Multinomial and conditional logit regression

m = dnn(Species~., data = iris, loss = "clogit", lr = 0.01)

m = dnn(Species~., data = iris, loss = "multinomial", lr = 0.01)

Y = t(stats::rmultinom(100, 10, prob = c(0.2, 0.2, 0.5)))

m = dnn(cbind(X1, X2, X3)~., data = data.frame(Y, A = as.factor(runif(100))), loss = "multinomial", lr = 0.01)

## conditional logit for size > 1 is not supported yet

# Hyperparameter tuning (experimental feature)

hidden_values = matrix(c(5, 2,

4, 2,

10,2,

15,2), 4, 2, byrow = TRUE)

## Potential architectures we want to test, first column == number of nodes

print(hidden_values)

nn.fit = dnn(Species~.,

data = iris,

epochs = 30L,

loss = "softmax",

hidden = tune(values = hidden_values),

lr = tune(0.00001, 0.1) # tune lr between range 0.00001 and 0.1

)

## Tuning results:

print(nn.fit$tuning)

# test = Inf means that tuning was cancelled after only one fit (within the CV)

# Advanced: Custom loss functions and additional parameters

## Normal Likelihood with sd parameter:

custom_loss = function(pred, true) {

logLik = torch::distr_normal(pred,

scale = torch::nnf_relu(scale)+

0.001)$log_prob(true)

return(-logLik$mean())

}

nn.fit<- dnn(Sepal.Length~.,

data = datasets::iris,

loss = custom_loss,

verbose = FALSE,

custom_parameters = list(scale = 1.0)

)

nn.fit$parameter$scale

## Multivariate normal likelihood with parametrized covariance matrix

## Sigma = L*L^t + D

## Helper function to build covariance matrix

create_cov = function(LU, Diag) {

return(torch::torch_matmul(LU, LU$t()) + torch::torch_diag(Diag$exp()+0.01))

}

custom_loss_MVN = function(pred, true) {

Sigma = create_cov(SigmaPar, SigmaDiag)

logLik = torch::distr_multivariate_normal(pred,

covariance_matrix = Sigma)$

log_prob(true)

return(-logLik$mean())

}

nn.fit<- dnn(cbind(Sepal.Length, Sepal.Width, Petal.Length)~.,

data = datasets::iris,

lr = 0.01,

verbose = FALSE,

loss = custom_loss_MVN,

custom_parameters =

list(SigmaDiag = rep(0, 3),

SigmaPar = matrix(rnorm(6, sd = 0.001), 3, 2))

)

as.matrix(create_cov(nn.fit$loss$parameter$SigmaPar,

nn.fit$loss$parameter$SigmaDiag))

}

#> Loss at epoch 1: 1.122883, lr: 0.01000

#> Loss at epoch 2: 0.939408, lr: 0.01000

#> Loss at epoch 3: 0.805962, lr: 0.01000

#> Loss at epoch 4: 0.700783, lr: 0.01000

#> Loss at epoch 5: 0.635470, lr: 0.01000

#> Loss at epoch 6: 0.570248, lr: 0.01000

#> Loss at epoch 7: 0.520989, lr: 0.01000

#> Loss at epoch 8: 0.491538, lr: 0.01000

#> Loss at epoch 9: 0.470051, lr: 0.01000

#> Loss at epoch 10: 0.434126, lr: 0.01000

#> Loss at epoch 11: 0.404641, lr: 0.01000

#> Loss at epoch 12: 0.382035, lr: 0.01000

#> Loss at epoch 13: 0.363658, lr: 0.01000

#> Loss at epoch 14: 0.348712, lr: 0.01000

#> Loss at epoch 15: 0.325178, lr: 0.01000

#> Loss at epoch 16: 0.316422, lr: 0.01000

#> Loss at epoch 17: 0.294118, lr: 0.01000

#> Loss at epoch 18: 0.300825, lr: 0.01000

#> Loss at epoch 19: 0.273868, lr: 0.01000

#> Loss at epoch 20: 0.268420, lr: 0.01000

#> Loss at epoch 21: 0.258743, lr: 0.01000

#> Loss at epoch 22: 0.251251, lr: 0.01000

#> Loss at epoch 23: 0.241765, lr: 0.01000

#> Loss at epoch 24: 0.224810, lr: 0.01000

#> Loss at epoch 25: 0.215337, lr: 0.01000

#> Loss at epoch 26: 0.214214, lr: 0.01000

#> Loss at epoch 27: 0.215144, lr: 0.01000

#> Loss at epoch 28: 0.200791, lr: 0.01000

#> Loss at epoch 29: 0.191077, lr: 0.01000

#> Loss at epoch 30: 0.180039, lr: 0.01000

#> Loss at epoch 31: 0.191212, lr: 0.01000

#> Loss at epoch 32: 0.180171, lr: 0.01000

#> Loss at epoch 33: 0.166964, lr: 0.01000

#> Loss at epoch 34: 0.176963, lr: 0.01000

#> Loss at epoch 35: 0.159958, lr: 0.01000

#> Loss at epoch 36: 0.171904, lr: 0.01000

#> Loss at epoch 37: 0.162911, lr: 0.01000

#> Loss at epoch 38: 0.143172, lr: 0.01000

#> Loss at epoch 39: 0.144486, lr: 0.01000

#> Loss at epoch 40: 0.147660, lr: 0.01000

#> Loss at epoch 41: 0.140176, lr: 0.01000

#> Loss at epoch 42: 0.145586, lr: 0.01000

#> Loss at epoch 43: 0.137137, lr: 0.01000

#> Loss at epoch 44: 0.128878, lr: 0.01000

#> Loss at epoch 45: 0.131917, lr: 0.01000

#> Loss at epoch 46: 0.126327, lr: 0.01000

#> Loss at epoch 47: 0.128138, lr: 0.01000

#> Loss at epoch 48: 0.123225, lr: 0.01000

#> Loss at epoch 49: 0.123541, lr: 0.01000

#> Loss at epoch 50: 0.115989, lr: 0.01000

#> Loss at epoch 51: 0.132032, lr: 0.01000

#> Loss at epoch 52: 0.119388, lr: 0.01000

#> Loss at epoch 53: 0.111416, lr: 0.01000

#> Loss at epoch 54: 0.117665, lr: 0.01000

#> Loss at epoch 55: 0.106307, lr: 0.01000

#> Loss at epoch 56: 0.124540, lr: 0.01000

#> Loss at epoch 57: 0.118285, lr: 0.01000

#> Loss at epoch 58: 0.111521, lr: 0.01000

#> Loss at epoch 59: 0.100682, lr: 0.01000

#> Loss at epoch 60: 0.106777, lr: 0.01000

#> Loss at epoch 61: 0.104414, lr: 0.01000

#> Loss at epoch 62: 0.098554, lr: 0.01000

#> Loss at epoch 63: 0.106682, lr: 0.01000

#> Loss at epoch 64: 0.102592, lr: 0.01000

#> Loss at epoch 65: 0.103393, lr: 0.01000

#> Loss at epoch 66: 0.094509, lr: 0.01000

#> Loss at epoch 67: 0.101544, lr: 0.01000

#> Loss at epoch 68: 0.089308, lr: 0.01000

#> Loss at epoch 69: 0.086096, lr: 0.01000

#> Loss at epoch 70: 0.090955, lr: 0.01000

#> Loss at epoch 71: 0.101453, lr: 0.01000

#> Loss at epoch 72: 0.090777, lr: 0.01000

#> Loss at epoch 73: 0.096065, lr: 0.01000

#> Loss at epoch 74: 0.087671, lr: 0.01000

#> Loss at epoch 75: 0.085364, lr: 0.01000

#> Loss at epoch 76: 0.092898, lr: 0.01000

#> Loss at epoch 77: 0.099216, lr: 0.01000

#> Loss at epoch 78: 0.113096, lr: 0.01000

#> Loss at epoch 79: 0.087467, lr: 0.01000

#> Loss at epoch 80: 0.110578, lr: 0.01000

#> Loss at epoch 81: 0.097965, lr: 0.01000

#> Loss at epoch 82: 0.086167, lr: 0.01000

#> Loss at epoch 83: 0.104936, lr: 0.01000

#> Loss at epoch 84: 0.083683, lr: 0.01000

#> Loss at epoch 85: 0.092008, lr: 0.01000

#> Loss at epoch 86: 0.079450, lr: 0.01000

#> Loss at epoch 87: 0.160431, lr: 0.01000

#> Loss at epoch 88: 0.159892, lr: 0.01000

#> Loss at epoch 89: 0.083055, lr: 0.01000

#> Loss at epoch 90: 0.080187, lr: 0.01000

#> Loss at epoch 91: 0.089905, lr: 0.01000

#> Loss at epoch 92: 0.069938, lr: 0.01000

#> Loss at epoch 93: 0.103553, lr: 0.01000

#> Loss at epoch 94: 0.075622, lr: 0.01000

#> Loss at epoch 95: 0.081060, lr: 0.01000

#> Loss at epoch 96: 0.080786, lr: 0.01000

#> Loss at epoch 97: 0.079348, lr: 0.01000

#> Loss at epoch 98: 0.086677, lr: 0.01000

#> Loss at epoch 99: 0.081812, lr: 0.01000

#> Loss at epoch 100: 0.091377, lr: 0.01000

#> Loss at epoch 101: 0.074764, lr: 0.01000

#> Loss at epoch 102: 0.067267, lr: 0.01000

#> Loss at epoch 103: 0.103423, lr: 0.01000

#> Loss at epoch 104: 0.092478, lr: 0.01000

#> Loss at epoch 105: 0.081089, lr: 0.01000

#> Loss at epoch 106: 0.064284, lr: 0.01000

#> Loss at epoch 107: 0.068101, lr: 0.01000

#> Loss at epoch 108: 0.072496, lr: 0.01000

#> Loss at epoch 109: 0.071757, lr: 0.01000

#> Loss at epoch 110: 0.077805, lr: 0.01000

#> Loss at epoch 111: 0.076026, lr: 0.01000

#> Loss at epoch 112: 0.069836, lr: 0.01000

#> Loss at epoch 113: 0.081460, lr: 0.01000

#> Loss at epoch 114: 0.066927, lr: 0.01000

#> Loss at epoch 115: 0.076848, lr: 0.01000

#> Loss at epoch 116: 0.064451, lr: 0.01000

#> Loss at epoch 117: 0.068426, lr: 0.01000

#> Loss at epoch 118: 0.075841, lr: 0.01000

#> Loss at epoch 119: 0.072744, lr: 0.01000

#> Loss at epoch 120: 0.086062, lr: 0.01000

#> Loss at epoch 121: 0.074982, lr: 0.01000

#> Loss at epoch 122: 0.075559, lr: 0.01000

#> Loss at epoch 123: 0.063803, lr: 0.01000

#> Loss at epoch 124: 0.077047, lr: 0.01000

#> Loss at epoch 125: 0.085156, lr: 0.01000

#> Loss at epoch 126: 0.071627, lr: 0.01000

#> Loss at epoch 127: 0.076577, lr: 0.01000

#> Loss at epoch 128: 0.073260, lr: 0.01000

#> Loss at epoch 129: 0.080519, lr: 0.01000

#> Loss at epoch 130: 0.068600, lr: 0.01000

#> Loss at epoch 131: 0.071613, lr: 0.01000

#> Loss at epoch 132: 0.090010, lr: 0.01000

#> Loss at epoch 133: 0.075034, lr: 0.01000

#> Loss at epoch 134: 0.065622, lr: 0.01000

#> Loss at epoch 135: 0.065883, lr: 0.01000

#> Loss at epoch 136: 0.066783, lr: 0.01000

#> Loss at epoch 137: 0.063999, lr: 0.01000

#> Loss at epoch 138: 0.075011, lr: 0.01000

#> Loss at epoch 139: 0.073635, lr: 0.01000

#> Loss at epoch 140: 0.086393, lr: 0.01000

#> Loss at epoch 141: 0.088661, lr: 0.01000

#> Loss at epoch 142: 0.080406, lr: 0.01000

#> Loss at epoch 143: 0.063072, lr: 0.01000

#> Loss at epoch 144: 0.072055, lr: 0.01000

#> Loss at epoch 145: 0.071915, lr: 0.01000

#> Loss at epoch 146: 0.074514, lr: 0.01000

#> Loss at epoch 147: 0.063698, lr: 0.01000

#> Loss at epoch 148: 0.069009, lr: 0.01000

#> Loss at epoch 149: 0.075835, lr: 0.01000

#> Loss at epoch 150: 0.065982, lr: 0.01000

#> dnn(formula = Species ~ Sepal.Length + Sepal.Width + Petal.Length +

#> Petal.Width, data = datasets::iris, loss = "softmax")

#> An `nn_module` containing 2,953 parameters.

#>

#> ── Modules ─────────────────────────────────────────────────────────────────────

#> • 0: <nn_linear> #250 parameters

#> • 1: <nn_selu> #0 parameters

#> • 2: <nn_linear> #2,550 parameters

#> • 3: <nn_selu> #0 parameters

#> • 4: <nn_linear> #153 parameters
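
The per-layer parameter counts in the summary above can be checked by hand. The following is a minimal base R sketch, assuming the defaults shown under Usage (`hidden = c(50L, 50L)`, `bias = TRUE`), the four iris predictors as inputs, and three output units for the three species. The variable names are illustrative and not part of the package.

```r
# Layer widths: 4 predictors -> 50 -> 50 -> 3 classes (assumed from the call above)
widths <- c(4L, 50L, 50L, 3L)

n_in  <- head(widths, -1)  # inputs to each linear layer
n_out <- tail(widths, -1)  # outputs of each linear layer

# Each linear layer holds an n_in x n_out weight matrix plus one bias per output unit
params_per_layer <- n_in * n_out + n_out
total_params <- sum(params_per_layer)

print(params_per_layer)
print(total_params)
```

The three values correspond to the `nn_linear` modules 0, 2 and 4; the `nn_selu` activations contribute no parameters.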

#> Number of Neighborhoods reduced to 8

#> Number of Neighborhoods reduced to 8

#> Number of Neighborhoods reduced to 8

#> Number of Neighborhoods reduced to 8

#> Number of Neighborhoods reduced to 8

#> Number of Neighborhoods reduced to 8

#> Loss at epoch 1: 0.601621, lr: 0.01000

#> Loss at epoch 2: 0.527020, lr: 0.01000

#> Loss at epoch 3: 0.482529, lr: 0.01000

#> Loss at epoch 4: 0.442351, lr: 0.01000

#> Loss at epoch 5: 0.416154, lr: 0.01000

#> Loss at epoch 6: 0.388134, lr: 0.01000

#> Loss at epoch 7: 0.366647, lr: 0.01000

#> Loss at epoch 8: 0.355607, lr: 0.01000

#> Loss at epoch 9: 0.336405, lr: 0.01000

#> Loss at epoch 10: 0.319753, lr: 0.01000

#> Loss at epoch 11: 0.308047, lr: 0.01000

#> Loss at epoch 12: 0.304148, lr: 0.01000

#> Loss at epoch 13: 0.286538, lr: 0.01000

#> Loss at epoch 14: 0.281094, lr: 0.01000

#> Loss at epoch 15: 0.265741, lr: 0.01000

#> Loss at epoch 16: 0.257500, lr: 0.01000

#> Loss at epoch 17: 0.247748, lr: 0.01000

#> Loss at epoch 18: 0.244144, lr: 0.01000

#> Loss at epoch 19: 0.236366, lr: 0.01000

#> Loss at epoch 20: 0.225660, lr: 0.01000

#> Loss at epoch 21: 0.220222, lr: 0.01000

#> Loss at epoch 22: 0.215510, lr: 0.01000

#> Loss at epoch 23: 0.208355, lr: 0.01000

#> Loss at epoch 24: 0.218105, lr: 0.01000

#> Loss at epoch 25: 0.196464, lr: 0.01000

#> Loss at epoch 26: 0.194430, lr: 0.01000

#> Loss at epoch 27: 0.181971, lr: 0.01000

#> Loss at epoch 28: 0.181993, lr: 0.01000

#> Loss at epoch 29: 0.178608, lr: 0.01000

#> Loss at epoch 30: 0.173065, lr: 0.01000

#> Loss at epoch 31: 0.167747, lr: 0.01000

#> Loss at epoch 32: 0.159985, lr: 0.01000

#> Loss at epoch 33: 0.158465, lr: 0.01000

#> Loss at epoch 34: 0.149841, lr: 0.01000

#> Loss at epoch 35: 0.146935, lr: 0.01000

#> Loss at epoch 36: 0.142092, lr: 0.01000

#> Loss at epoch 37: 0.139657, lr: 0.01000

#> Loss at epoch 38: 0.137810, lr: 0.01000

#> Loss at epoch 39: 0.131381, lr: 0.01000

#> Loss at epoch 40: 0.131064, lr: 0.01000

#> Loss at epoch 41: 0.123916, lr: 0.01000

#> Loss at epoch 42: 0.125249, lr: 0.01000

#> Loss at epoch 43: 0.120735, lr: 0.01000

#> Loss at epoch 44: 0.122068, lr: 0.01000

#> Loss at epoch 45: 0.116491, lr: 0.01000

#> Loss at epoch 46: 0.111433, lr: 0.01000

#> Loss at epoch 47: 0.114211, lr: 0.01000

#> Loss at epoch 48: 0.107359, lr: 0.01000

#> Loss at epoch 49: 0.107689, lr: 0.01000

#> Loss at epoch 50: 0.113173, lr: 0.01000

#> Loss at epoch 51: 0.103488, lr: 0.01000

#> Loss at epoch 52: 0.101193, lr: 0.01000

#> Loss at epoch 53: 0.098770, lr: 0.01000

#> Loss at epoch 54: 0.098003, lr: 0.01000

#> Loss at epoch 55: 0.093003, lr: 0.01000

#> Loss at epoch 56: 0.093533, lr: 0.01000

#> Loss at epoch 57: 0.091959, lr: 0.01000

#> Loss at epoch 58: 0.090286, lr: 0.01000

#> Loss at epoch 59: 0.084182, lr: 0.01000

#> Loss at epoch 60: 0.089599, lr: 0.01000

#> Loss at epoch 61: 0.088411, lr: 0.01000

#> Loss at epoch 62: 0.085659, lr: 0.01000

#> Loss at epoch 63: 0.086118, lr: 0.01000

#> Loss at epoch 64: 0.081835, lr: 0.01000

#> Loss at epoch 65: 0.086713, lr: 0.01000

#> Loss at epoch 66: 0.080826, lr: 0.01000

#> Loss at epoch 67: 0.078375, lr: 0.01000

#> Loss at epoch 68: 0.073323, lr: 0.01000

#> Loss at epoch 69: 0.076566, lr: 0.01000

#> Loss at epoch 70: 0.074961, lr: 0.01000

#> Loss at epoch 71: 0.078304, lr: 0.01000

#> Loss at epoch 72: 0.077540, lr: 0.01000

#> Loss at epoch 73: 0.079322, lr: 0.01000

#> Loss at epoch 74: 0.073114, lr: 0.01000

#> Loss at epoch 75: 0.072321, lr: 0.01000

#> Loss at epoch 76: 0.070515, lr: 0.01000

#> Loss at epoch 77: 0.068053, lr: 0.01000

#> Loss at epoch 78: 0.071502, lr: 0.01000

#> Loss at epoch 79: 0.069582, lr: 0.01000

#> Loss at epoch 80: 0.069650, lr: 0.01000

#> Loss at epoch 81: 0.069487, lr: 0.01000

#> Loss at epoch 82: 0.068888, lr: 0.01000

#> Loss at epoch 83: 0.068813, lr: 0.01000

#> Loss at epoch 84: 0.064974, lr: 0.01000

#> Loss at epoch 85: 0.065338, lr: 0.01000

#> Loss at epoch 86: 0.066665, lr: 0.01000

#> Loss at epoch 87: 0.065803, lr: 0.01000

#> Loss at epoch 88: 0.061202, lr: 0.01000

#> Loss at epoch 89: 0.062329, lr: 0.01000

#> Loss at epoch 90: 0.064357, lr: 0.01000

#> Loss at epoch 91: 0.062041, lr: 0.01000

#> Loss at epoch 92: 0.059282, lr: 0.01000

#> Loss at epoch 93: 0.063019, lr: 0.01000

#> Loss at epoch 94: 0.062858, lr: 0.01000

#> Loss at epoch 95: 0.062765, lr: 0.01000

#> Loss at epoch 96: 0.058870, lr: 0.01000

#> Loss at epoch 97: 0.057647, lr: 0.01000

#> Loss at epoch 98: 0.063779, lr: 0.01000

#> Loss at epoch 99: 0.068420, lr: 0.01000

#> Loss at epoch 100: 0.059893, lr: 0.01000

#> Loss at epoch 1: 1.049376, lr: 0.01000

#> Loss at epoch 2: 0.854018, lr: 0.01000

#> Loss at epoch 3: 0.760081, lr: 0.01000

#> Loss at epoch 4: 0.680631, lr: 0.01000

#> Loss at epoch 5: 0.603664, lr: 0.01000

#> Loss at epoch 6: 0.571285, lr: 0.01000

#> Loss at epoch 7: 0.522103, lr: 0.01000

#> Loss at epoch 8: 0.492490, lr: 0.01000

#> Loss at epoch 9: 0.471385, lr: 0.01000

#> Loss at epoch 10: 0.447510, lr: 0.01000

#> Loss at epoch 11: 0.425259, lr: 0.01000

#> Loss at epoch 12: 0.400507, lr: 0.01000

#> Loss at epoch 13: 0.394950, lr: 0.01000

#> Loss at epoch 14: 0.368494, lr: 0.01000

#> Loss at epoch 15: 0.369700, lr: 0.01000

#> Loss at epoch 16: 0.350458, lr: 0.01000

#> Loss at epoch 17: 0.336985, lr: 0.01000

#> Loss at epoch 18: 0.323236, lr: 0.01000

#> Loss at epoch 19: 0.304475, lr: 0.01000

#> Loss at epoch 20: 0.290913, lr: 0.01000

#> Loss at epoch 21: 0.297108, lr: 0.01000

#> Loss at epoch 22: 0.280417, lr: 0.01000

#> Loss at epoch 23: 0.256421, lr: 0.01000

#> Loss at epoch 24: 0.244953, lr: 0.01000

#> Loss at epoch 25: 0.244835, lr: 0.01000

#> Loss at epoch 26: 0.232594, lr: 0.01000

#> Loss at epoch 27: 0.229202, lr: 0.01000

#> Loss at epoch 28: 0.214360, lr: 0.01000

#> Loss at epoch 29: 0.212492, lr: 0.01000

#> Loss at epoch 30: 0.219175, lr: 0.01000

#> Loss at epoch 31: 0.194153, lr: 0.01000

#> Loss at epoch 32: 0.194438, lr: 0.01000

#> Loss at epoch 33: 0.188413, lr: 0.01000

#> Loss at epoch 34: 0.191631, lr: 0.01000

#> Loss at epoch 35: 0.177538, lr: 0.01000

#> Loss at epoch 36: 0.166582, lr: 0.01000

#> Loss at epoch 37: 0.170072, lr: 0.01000

#> Loss at epoch 38: 0.193429, lr: 0.01000

#> Loss at epoch 39: 0.160113, lr: 0.01000

#> Loss at epoch 40: 0.153373, lr: 0.01000

#> Loss at epoch 41: 0.152776, lr: 0.01000

#> Loss at epoch 42: 0.157244, lr: 0.01000

#> Loss at epoch 43: 0.154730, lr: 0.01000

#> Loss at epoch 44: 0.157798, lr: 0.01000

#> Loss at epoch 45: 0.151893, lr: 0.01000

#> Loss at epoch 46: 0.137553, lr: 0.01000

#> Loss at epoch 47: 0.131623, lr: 0.01000

#> Loss at epoch 48: 0.140020, lr: 0.01000

#> Loss at epoch 49: 0.125532, lr: 0.01000

#> Loss at epoch 50: 0.145807, lr: 0.01000

#> Loss at epoch 51: 0.141335, lr: 0.01000

#> Loss at epoch 52: 0.119975, lr: 0.01000

#> Loss at epoch 53: 0.135336, lr: 0.01000

#> Loss at epoch 54: 0.124408, lr: 0.01000

#> Loss at epoch 55: 0.127828, lr: 0.01000

#> Loss at epoch 56: 0.117936, lr: 0.01000

#> Loss at epoch 57: 0.119951, lr: 0.01000

#> Loss at epoch 58: 0.113324, lr: 0.01000

#> Loss at epoch 59: 0.110688, lr: 0.01000

#> Loss at epoch 60: 0.113019, lr: 0.01000

#> Loss at epoch 61: 0.109198, lr: 0.01000

#> Loss at epoch 62: 0.115576, lr: 0.01000

#> Loss at epoch 63: 0.101509, lr: 0.01000

#> Loss at epoch 64: 0.106491, lr: 0.01000

#> Loss at epoch 65: 0.105197, lr: 0.01000

#> Loss at epoch 66: 0.099187, lr: 0.01000

#> Loss at epoch 67: 0.100820, lr: 0.01000

#> Loss at epoch 68: 0.103384, lr: 0.01000

#> Loss at epoch 69: 0.092852, lr: 0.01000

#> Loss at epoch 70: 0.103932, lr: 0.01000

#> Loss at epoch 71: 0.109047, lr: 0.01000

#> Loss at epoch 72: 0.092890, lr: 0.01000

#> Loss at epoch 73: 0.102648, lr: 0.01000

#> Loss at epoch 74: 0.115875, lr: 0.01000

#> Loss at epoch 75: 0.089873, lr: 0.01000

#> Loss at epoch 76: 0.092550, lr: 0.01000

#> Loss at epoch 77: 0.098603, lr: 0.01000

#> Loss at epoch 78: 0.090885, lr: 0.01000

#> Loss at epoch 79: 0.087177, lr: 0.01000

#> Loss at epoch 80: 0.115214, lr: 0.01000

#> Loss at epoch 81: 0.091414, lr: 0.01000

#> Loss at epoch 82: 0.106223, lr: 0.01000

#> Loss at epoch 83: 0.088888, lr: 0.01000

#> Loss at epoch 84: 0.081500, lr: 0.01000

#> Loss at epoch 85: 0.087106, lr: 0.01000

#> Loss at epoch 86: 0.090019, lr: 0.01000

#> Loss at epoch 87: 0.082456, lr: 0.01000

#> Loss at epoch 88: 0.085815, lr: 0.01000

#> Loss at epoch 89: 0.086994, lr: 0.01000

#> Loss at epoch 90: 0.084518, lr: 0.01000

#> Loss at epoch 91: 0.084101, lr: 0.01000

#> Loss at epoch 92: 0.095540, lr: 0.01000

#> Loss at epoch 93: 0.079930, lr: 0.01000

#> Loss at epoch 94: 0.102735, lr: 0.01000

#> Loss at epoch 95: 0.099556, lr: 0.01000

#> Loss at epoch 96: 0.093528, lr: 0.01000

#> Loss at epoch 97: 0.081843, lr: 0.01000

#> Loss at epoch 98: 0.089272, lr: 0.01000

#> Loss at epoch 99: 0.077286, lr: 0.01000

#> Loss at epoch 100: 0.091239, lr: 0.01000

#> Loss at epoch 1: 3.992303, lr: 0.01000

#> Loss at epoch 2: 3.443521, lr: 0.01000

#> Loss at epoch 3: 3.412122, lr: 0.01000

#> Loss at epoch 4: 3.403807, lr: 0.01000

#> Loss at epoch 5: 3.382572, lr: 0.01000

#> Loss at epoch 6: 3.379379, lr: 0.01000

#> Loss at epoch 7: 3.372216, lr: 0.01000

#> Loss at epoch 8: 3.363098, lr: 0.01000

#> Loss at epoch 9: 3.353826, lr: 0.01000

#> Loss at epoch 10: 3.347643, lr: 0.01000

#> Loss at epoch 11: 3.336297, lr: 0.01000

#> Loss at epoch 12: 3.323684, lr: 0.01000

#> Loss at epoch 13: 3.316515, lr: 0.01000

#> Loss at epoch 14: 3.306178, lr: 0.01000

#> Loss at epoch 15: 3.297047, lr: 0.01000

#> Loss at epoch 16: 3.288667, lr: 0.01000

#> Loss at epoch 17: 3.273517, lr: 0.01000

#> Loss at epoch 18: 3.255925, lr: 0.01000

#> Loss at epoch 19: 3.250206, lr: 0.01000

#> Loss at epoch 20: 3.223473, lr: 0.01000

#> Loss at epoch 21: 3.211311, lr: 0.01000

#> Loss at epoch 22: 3.198291, lr: 0.01000

#> Loss at epoch 23: 3.177080, lr: 0.01000

#> Loss at epoch 24: 3.159677, lr: 0.01000

#> Loss at epoch 25: 3.136800, lr: 0.01000

#> Loss at epoch 26: 3.117822, lr: 0.01000

#> Loss at epoch 27: 3.092546, lr: 0.01000

#> Loss at epoch 28: 3.069404, lr: 0.01000

#> Loss at epoch 29: 3.038474, lr: 0.01000

#> Loss at epoch 30: 3.015352, lr: 0.01000

#> Loss at epoch 31: 2.992323, lr: 0.01000

#> Loss at epoch 32: 2.963254, lr: 0.01000

#> Loss at epoch 33: 2.927048, lr: 0.01000

#> Loss at epoch 34: 2.912457, lr: 0.01000

#> Loss at epoch 35: 2.877573, lr: 0.01000

#> Loss at epoch 36: 2.849750, lr: 0.01000

#> Loss at epoch 37: 2.823297, lr: 0.01000

#> Loss at epoch 38: 2.798708, lr: 0.01000

#> Loss at epoch 39: 2.774173, lr: 0.01000

#> Loss at epoch 40: 2.755432, lr: 0.01000

#> Loss at epoch 41: 2.728382, lr: 0.01000

#> Loss at epoch 42: 2.703559, lr: 0.01000

#> Loss at epoch 43: 2.685270, lr: 0.01000

#> Loss at epoch 44: 2.675339, lr: 0.01000

#> Loss at epoch 45: 2.646701, lr: 0.01000

#> Loss at epoch 46: 2.633486, lr: 0.01000

#> Loss at epoch 47: 2.616056, lr: 0.01000

#> Loss at epoch 48: 2.596277, lr: 0.01000

#> Loss at epoch 49: 2.580026, lr: 0.01000

#> Loss at epoch 50: 2.566535, lr: 0.01000

#> Loss at epoch 51: 2.547462, lr: 0.01000

#> Loss at epoch 52: 2.533624, lr: 0.01000

#> Loss at epoch 53: 2.518617, lr: 0.01000

#> Loss at epoch 54: 2.495553, lr: 0.01000

#> Loss at epoch 55: 2.485435, lr: 0.01000

#> Loss at epoch 56: 2.477212, lr: 0.01000

#> Loss at epoch 57: 2.457061, lr: 0.01000

#> Loss at epoch 58: 2.439397, lr: 0.01000

#> Loss at epoch 59: 2.430008, lr: 0.01000

#> Loss at epoch 60: 2.418852, lr: 0.01000

#> Loss at epoch 61: 2.398282, lr: 0.01000

#> Loss at epoch 62: 2.387675, lr: 0.01000

#> Loss at epoch 63: 2.376007, lr: 0.01000

#> Loss at epoch 64: 2.363876, lr: 0.01000

#> Loss at epoch 65: 2.352631, lr: 0.01000

#> Loss at epoch 66: 2.341351, lr: 0.01000

#> Loss at epoch 67: 2.327733, lr: 0.01000

#> Loss at epoch 68: 2.324057, lr: 0.01000

#> Loss at epoch 69: 2.314050, lr: 0.01000

#> Loss at epoch 70: 2.300553, lr: 0.01000

#> Loss at epoch 71: 2.295034, lr: 0.01000

#> Loss at epoch 72: 2.285905, lr: 0.01000

#> Loss at epoch 73: 2.275905, lr: 0.01000

#> Loss at epoch 74: 2.269326, lr: 0.01000

#> Loss at epoch 75: 2.259049, lr: 0.01000

#> Loss at epoch 76: 2.254114, lr: 0.01000

#> Loss at epoch 77: 2.251495, lr: 0.01000

#> Loss at epoch 78: 2.246542, lr: 0.01000

#> Loss at epoch 79: 2.238564, lr: 0.01000

#> Loss at epoch 80: 2.233981, lr: 0.01000

#> Loss at epoch 81: 2.227862, lr: 0.01000

#> Loss at epoch 82: 2.225135, lr: 0.01000

#> Loss at epoch 83: 2.220459, lr: 0.01000

#> Loss at epoch 84: 2.215439, lr: 0.01000

#> Loss at epoch 85: 2.215550, lr: 0.01000

#> Loss at epoch 86: 2.210361, lr: 0.01000

#> Loss at epoch 87: 2.207266, lr: 0.01000

#> Loss at epoch 88: 2.203069, lr: 0.01000

#> Loss at epoch 89: 2.197910, lr: 0.01000

#> Loss at epoch 90: 2.195693, lr: 0.01000

#> Loss at epoch 91: 2.192172, lr: 0.01000

#> Loss at epoch 92: 2.190574, lr: 0.01000

#> Loss at epoch 93: 2.189275, lr: 0.01000

#> Loss at epoch 94: 2.184715, lr: 0.01000

#> Loss at epoch 95: 2.179309, lr: 0.01000

#> Loss at epoch 96: 2.181983, lr: 0.01000

#> Loss at epoch 97: 2.178058, lr: 0.01000

#> Loss at epoch 98: 2.174677, lr: 0.01000

#> Loss at epoch 99: 2.171290, lr: 0.01000

#> Loss at epoch 100: 2.177768, lr: 0.01000

#>      [,1] [,2]

#> [1,]    5    2

#> [2,]    4    2

#> [3,]   10    2

#> [4,]   15    2

#> Starting hyperparameter tuning...

#> Fitting final model...

#> # A tibble: 10 × 6

#>    steps  test train models hidden        lr

#>    <int> <dbl> <dbl> <lgl>  <list>     <dbl>

#>  1     1  32.2     0 NA     <dbl [2]> 0.0173

#>  2     2  22.0     0 NA     <dbl [2]> 0.0588

#>  3     3  26.4     0 NA     <dbl [2]> 0.0719

#>  4     4  32.3     0 NA     <dbl [2]> 0.0990

#>  5     5  47.0     0 NA     <dbl [2]> 0.0443

#>  6     6  44.7     0 NA     <dbl [2]> 0.0341

#>  7     7  67.8     0 NA     <dbl [2]> 0.0373

#>  8     8  31.7     0 NA     <dbl [2]> 0.0268

#>  9     9  24.6     0 NA     <dbl [2]> 0.0820

#> 10    10  45.0     0 NA     <dbl [2]> 0.0414

#>            [,1]       [,2]       [,3]

#> [1,] 0.32596233 0.04433576 0.07790677

#> [2,] 0.04433576 0.16945159 0.03192519

#> [3,] 0.07790677 0.03192519 0.22975661

# }
