Usage

config_optimizer(

type = c("adam", "adadelta", "adagrad", "rmsprop", "rprop", "sgd"),

verbose = FALSE,

...

)

Value

An object of class cito_optim, to be passed to dnn through its optimizer argument.

Details

Different optimizers need different variables; this function reports how those variables are set. For more information, see the corresponding functions:

adam: optim_adam

adadelta: optim_adadelta

adagrad: optim_adagrad

rmsprop: optim_rmsprop

rprop: optim_rprop

sgd: optim_sgd
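
The mapping above can be explored with a minimal sketch like the following. It assumes that cito is installed and that extra arguments passed through `...` use the same names as the matching torch function (here `momentum` and `nesterov` from `torch::optim_sgd`). The object name `opt_sgd` is illustrative only.

```r
if (requireNamespace("cito", quietly = TRUE)) {
  library(cito)

  # Print the values each optimizer type is configured with
  for (type_name in c("adam", "adadelta", "adagrad", "rmsprop", "rprop", "sgd")) {
    config_optimizer(type = type_name, verbose = TRUE)
  }

  # Assumption: arguments in `...` mirror those of torch::optim_sgd
  opt_sgd <- config_optimizer(type = "sgd", momentum = 0.9, nesterov = TRUE)

  # The result should carry the class named in the Value section
  print(class(opt_sgd))
}
```

Setting `verbose = TRUE` is a quick way to check which values an optimizer ends up with before passing the object to dnn.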

Examples

# \donttest{

if(torch::torch_is_installed()){

library(cito)

# create optimizer object

opt <- config_optimizer(type = "adagrad",

lr_decay = 1e-04,

weight_decay = 0.1,

verbose = TRUE)

# Build and train Network

nn.fit <- dnn(Sepal.Length ~ ., data = datasets::iris, optimizer = opt)

}

#> set adagrad optimizer with following values

#> lr_decay: [1e-04]

#> weight_decay: [0.1]

#> initial_accumulator_value: [0]

#> eps: [1e-10]

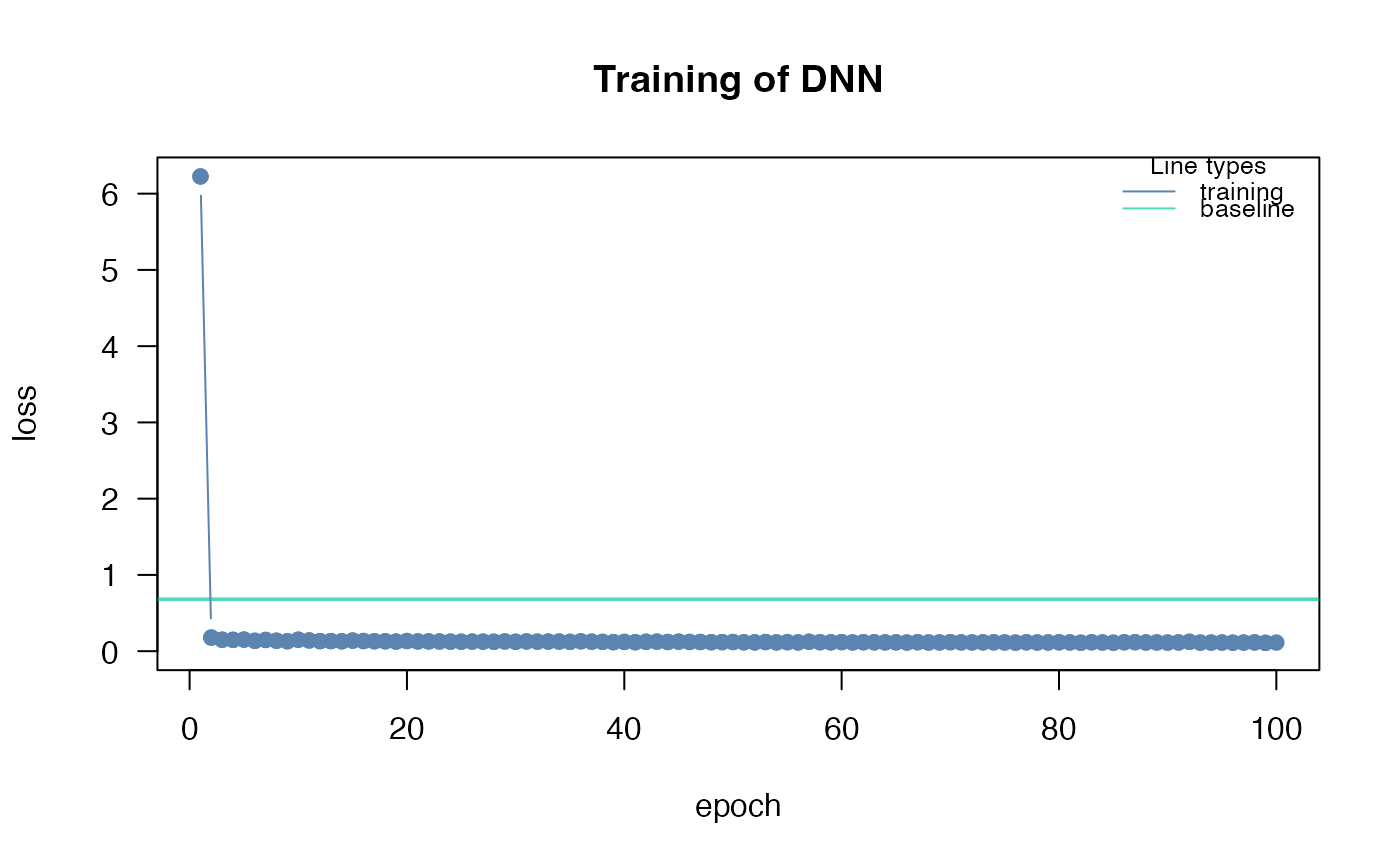

#> Loss at epoch 1: 5.434706, lr: 0.01000

#> Loss at epoch 2: 0.147970, lr: 0.01000

#> Loss at epoch 3: 0.135986, lr: 0.01000

#> Loss at epoch 4: 0.135486, lr: 0.01000

#> Loss at epoch 5: 0.131027, lr: 0.01000

#> Loss at epoch 6: 0.126657, lr: 0.01000

#> Loss at epoch 7: 0.125437, lr: 0.01000

#> Loss at epoch 8: 0.124690, lr: 0.01000

#> Loss at epoch 9: 0.124437, lr: 0.01000

#> Loss at epoch 10: 0.124501, lr: 0.01000

#> Loss at epoch 11: 0.127424, lr: 0.01000

#> Loss at epoch 12: 0.121171, lr: 0.01000

#> Loss at epoch 13: 0.122711, lr: 0.01000

#> Loss at epoch 14: 0.121640, lr: 0.01000

#> Loss at epoch 15: 0.118683, lr: 0.01000

#> Loss at epoch 16: 0.125965, lr: 0.01000

#> Loss at epoch 17: 0.121460, lr: 0.01000

#> Loss at epoch 18: 0.121079, lr: 0.01000

#> Loss at epoch 19: 0.119068, lr: 0.01000

#> Loss at epoch 20: 0.115174, lr: 0.01000

#> Loss at epoch 21: 0.119830, lr: 0.01000

#> Loss at epoch 22: 0.119216, lr: 0.01000

#> Loss at epoch 23: 0.124864, lr: 0.01000

#> Loss at epoch 24: 0.116221, lr: 0.01000

#> Loss at epoch 25: 0.113975, lr: 0.01000

#> Loss at epoch 26: 0.118420, lr: 0.01000

#> Loss at epoch 27: 0.113949, lr: 0.01000

#> Loss at epoch 28: 0.116257, lr: 0.01000

#> Loss at epoch 29: 0.113590, lr: 0.01000

#> Loss at epoch 30: 0.115900, lr: 0.01000

#> Loss at epoch 31: 0.115285, lr: 0.01000

#> Loss at epoch 32: 0.113506, lr: 0.01000

#> Loss at epoch 33: 0.114386, lr: 0.01000

#> Loss at epoch 34: 0.113213, lr: 0.01000

#> Loss at epoch 35: 0.119462, lr: 0.01000

#> Loss at epoch 36: 0.113817, lr: 0.01000

#> Loss at epoch 37: 0.117121, lr: 0.01000

#> Loss at epoch 38: 0.111578, lr: 0.01000

#> Loss at epoch 39: 0.114151, lr: 0.01000

#> Loss at epoch 40: 0.112557, lr: 0.01000

#> Loss at epoch 41: 0.113448, lr: 0.01000

#> Loss at epoch 42: 0.114760, lr: 0.01000

#> Loss at epoch 43: 0.118541, lr: 0.01000

#> Loss at epoch 44: 0.113428, lr: 0.01000

#> Loss at epoch 45: 0.114449, lr: 0.01000

#> Loss at epoch 46: 0.111930, lr: 0.01000

#> Loss at epoch 47: 0.110264, lr: 0.01000

#> Loss at epoch 48: 0.115660, lr: 0.01000

#> Loss at epoch 49: 0.112935, lr: 0.01000

#> Loss at epoch 50: 0.112793, lr: 0.01000

#> Loss at epoch 51: 0.111559, lr: 0.01000

#> Loss at epoch 52: 0.117571, lr: 0.01000

#> Loss at epoch 53: 0.113916, lr: 0.01000

#> Loss at epoch 54: 0.110999, lr: 0.01000

#> Loss at epoch 55: 0.112905, lr: 0.01000

#> Loss at epoch 56: 0.111579, lr: 0.01000

#> Loss at epoch 57: 0.111584, lr: 0.01000

#> Loss at epoch 58: 0.109349, lr: 0.01000

#> Loss at epoch 59: 0.110725, lr: 0.01000

#> Loss at epoch 60: 0.112281, lr: 0.01000

#> Loss at epoch 61: 0.112364, lr: 0.01000

#> Loss at epoch 62: 0.111839, lr: 0.01000

#> Loss at epoch 63: 0.109593, lr: 0.01000

#> Loss at epoch 64: 0.109720, lr: 0.01000

#> Loss at epoch 65: 0.110415, lr: 0.01000

#> Loss at epoch 66: 0.109808, lr: 0.01000

#> Loss at epoch 67: 0.114200, lr: 0.01000

#> Loss at epoch 68: 0.111774, lr: 0.01000

#> Loss at epoch 69: 0.112833, lr: 0.01000

#> Loss at epoch 70: 0.112160, lr: 0.01000

#> Loss at epoch 71: 0.111173, lr: 0.01000

#> Loss at epoch 72: 0.113472, lr: 0.01000

#> Loss at epoch 73: 0.109752, lr: 0.01000

#> Loss at epoch 74: 0.112768, lr: 0.01000

#> Loss at epoch 75: 0.110691, lr: 0.01000

#> Loss at epoch 76: 0.112001, lr: 0.01000

#> Loss at epoch 77: 0.109820, lr: 0.01000

#> Loss at epoch 78: 0.110821, lr: 0.01000

#> Loss at epoch 79: 0.112135, lr: 0.01000

#> Loss at epoch 80: 0.114343, lr: 0.01000

#> Loss at epoch 81: 0.111323, lr: 0.01000

#> Loss at epoch 82: 0.112789, lr: 0.01000

#> Loss at epoch 83: 0.114382, lr: 0.01000

#> Loss at epoch 84: 0.108308, lr: 0.01000

#> Loss at epoch 85: 0.112346, lr: 0.01000

#> Loss at epoch 86: 0.111382, lr: 0.01000

#> Loss at epoch 87: 0.108837, lr: 0.01000

#> Loss at epoch 88: 0.110568, lr: 0.01000

#> Loss at epoch 89: 0.107535, lr: 0.01000

#> Loss at epoch 90: 0.108457, lr: 0.01000

#> Loss at epoch 91: 0.111533, lr: 0.01000

#> Loss at epoch 92: 0.111983, lr: 0.01000

#> Loss at epoch 93: 0.109763, lr: 0.01000

#> Loss at epoch 94: 0.109996, lr: 0.01000

#> Loss at epoch 95: 0.109777, lr: 0.01000

#> Loss at epoch 96: 0.110851, lr: 0.01000

#> Loss at epoch 97: 0.109567, lr: 0.01000

#> Loss at epoch 98: 0.111678, lr: 0.01000

#> Loss at epoch 99: 0.109224, lr: 0.01000

#> Loss at epoch 100: 0.107545, lr: 0.01000

# }
