Calculates the Partial Dependence Plot (PDP) for one feature, either numeric or categorical, and returns it as a plot.

Usage

PDP(
  model,
  variable = NULL,
  data = NULL,
  ice = FALSE,
  resolution.ice = 20,
  plot = TRUE,
  parallel = FALSE,
  ...
)

# S3 method for class 'citodnn'

PDP(
  model,
  variable = NULL,
  data = NULL,
  ice = FALSE,
  resolution.ice = 20,
  plot = TRUE,
  parallel = FALSE,
  ...
)

# S3 method for class 'citodnnBootstrap'

PDP(
  model,
  variable = NULL,
  data = NULL,
  ice = FALSE,
  resolution.ice = 20,
  plot = TRUE,
  parallel = FALSE,
  ...
)

Arguments

- model

a model created by dnn

- variable

name of the variable (as a string) for which the PDP is computed. If none is supplied, the PDP is computed for all variables.

- data

new data on which the PDP should be performed. If NULL, the PDP is performed on the training data.

- ice

if TRUE, Individual Conditional Expectation (ICE) curves are shown in addition to the PDP

- resolution.ice

resolution of the value grid on which the ICE curves are computed

- plot

whether to plot the PDP

- parallel

whether to parallelize over bootstrap models

- ...

arguments passed to predict

Value

A list of plots made with 'ggplot2' consisting of an individual plot for each defined variable.

Description

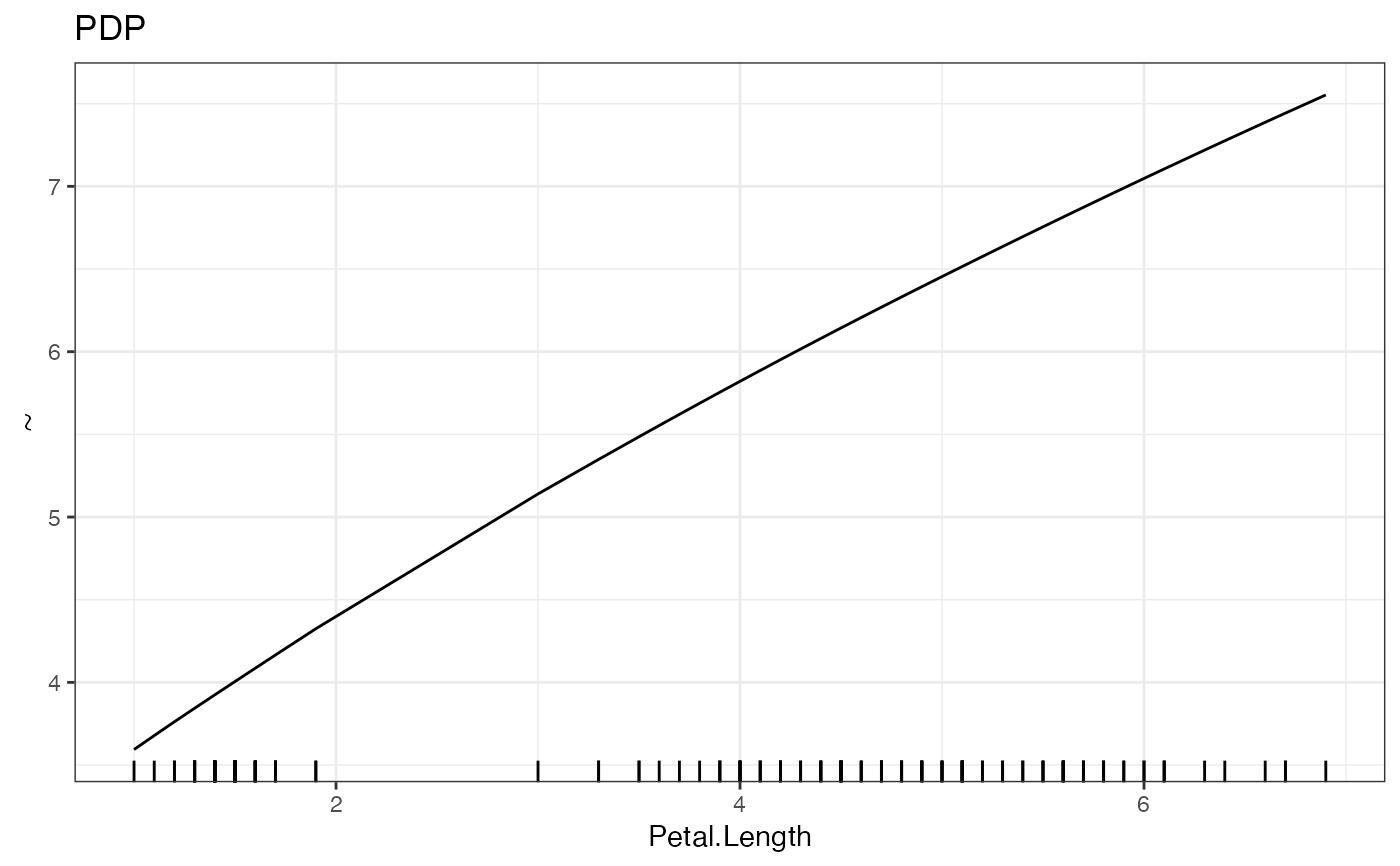

Performs a Partial Dependence Plot (PDP) estimation to analyze the relationship between a selected feature and the target variable.

The PDP function estimates the partial function \(\hat{f}_S\) with a Monte Carlo estimate:

\(\hat{f}_S(x_S)=\frac{1}{n}\sum_{i=1}^n\hat{f}(x_S,x^{(i)}_{C})\)

where \(x^{(i)}_{C}\) are the observed values of the remaining features for the \(i\)-th of the \(n\) data instances. In other words, for each value of the selected feature, that feature is set to the value in every instance while all other features keep their observed values, and the resulting predictions of the target variable are averaged.
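The Monte Carlo estimate above can be reproduced by hand to see what the averaging does. The following is a minimal base-R sketch, not cito's internal implementation: it assumes an ordinary linear model fitted with lm() as a stand-in for a dnn() network, and pdp_at() is a hypothetical helper. Following the description, the feature is evaluated at every observed value.

```r
# Sketch only: lm() stands in for a dnn() network; pdp_at() is hypothetical.
fit <- lm(Sepal.Length ~ ., data = iris)

# Average prediction with feature `var` fixed at `value` for all instances,
# i.e. (1/n) * sum_i f(x_S, x_C^(i))
pdp_at <- function(model, data, var, value) {
  data_mod <- data
  data_mod[[var]] <- rep(value, nrow(data_mod))  # set x_S in every row
  mean(predict(model, newdata = data_mod))       # average over instances
}

# Evaluate at every observed (unique, sorted) value of the feature
grid <- sort(unique(iris$Petal.Length))
pdp <- vapply(grid, function(v) pdp_at(fit, iris, "Petal.Length", v),
              numeric(1))

plot(grid, pdp, type = "l",
     xlab = "Petal.Length", ylab = "Partial dependence")
```

Because the stand-in model has no interactions, this PDP is a straight line whose slope equals the Petal.Length coefficient; a neural network will generally produce a curved profile.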

For categorical features, all data instances are used, and each instance is set to every level of the categorical feature in turn. The average prediction per level is then calculated and visualized in a bar plot.
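A categorical PDP can be sketched the same way: every instance is set to one level at a time and the predictions are averaged per level. This is again a minimal illustration that assumes an lm() stand-in for the network; it is not cito's code.

```r
# Sketch only: lm() stands in for a dnn() network.
fit <- lm(Sepal.Length ~ ., data = iris)
levels_sp <- levels(iris$Species)

# For each level: set Species to that level in every row, then average
pdp_cat <- vapply(levels_sp, function(lv) {
  data_mod <- iris
  # keep the original factor levels so predict() sees a valid factor
  data_mod$Species <- factor(rep(lv, nrow(data_mod)), levels = levels_sp)
  mean(predict(fit, newdata = data_mod))
}, numeric(1))

barplot(pdp_cat, xlab = "Species", ylab = "Average prediction")
```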

If the ice parameter is set to TRUE, the Individual Conditional Expectation (ICE) curves are also shown. These curves illustrate how each individual data sample reacts to changes in the feature value. Please note that this option is not available for categorical features. Unlike PDP, the ICE curves are computed using a value grid instead of utilizing every value of every data entry.
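ICE curves keep the per-instance predictions instead of averaging them, and averaging the ICE curves over instances recovers the PDP on the same grid. In this sketch the grid is an evenly spaced sequence whose length plays the role of resolution.ice; that cito builds its grid exactly this way is an assumption, and lm() again stands in for the network.

```r
# Sketch only: lm() stands in for a dnn() network; the evenly spaced grid
# is an assumed reading of resolution.ice.
fit <- lm(Sepal.Length ~ ., data = iris)
resolution_ice <- 20

x <- iris$Petal.Length
grid <- seq(min(x), max(x), length.out = resolution_ice)

# Matrix with one row per instance and one column per grid value
ice <- vapply(grid, function(v) {
  data_mod <- iris
  data_mod$Petal.Length <- rep(v, nrow(data_mod))
  predict(fit, newdata = data_mod)
}, numeric(nrow(iris)))

# Averaging the ICE curves over instances gives the PDP on the same grid
pdp_grid <- colMeans(ice)

# One grey line per instance, PDP drawn on top in yellow
matplot(grid, t(ice), type = "l", lty = 1, col = "grey70",
        xlab = "Petal.Length", ylab = "Prediction")
lines(grid, pdp_grid, col = "gold", lwd = 3)
```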

Note: The PDP analysis provides insight into the relationship between a specific feature and the target variable, helping to understand the feature's impact on the model's predictions. When ICE curves are shown, the original PDP is colored yellow.

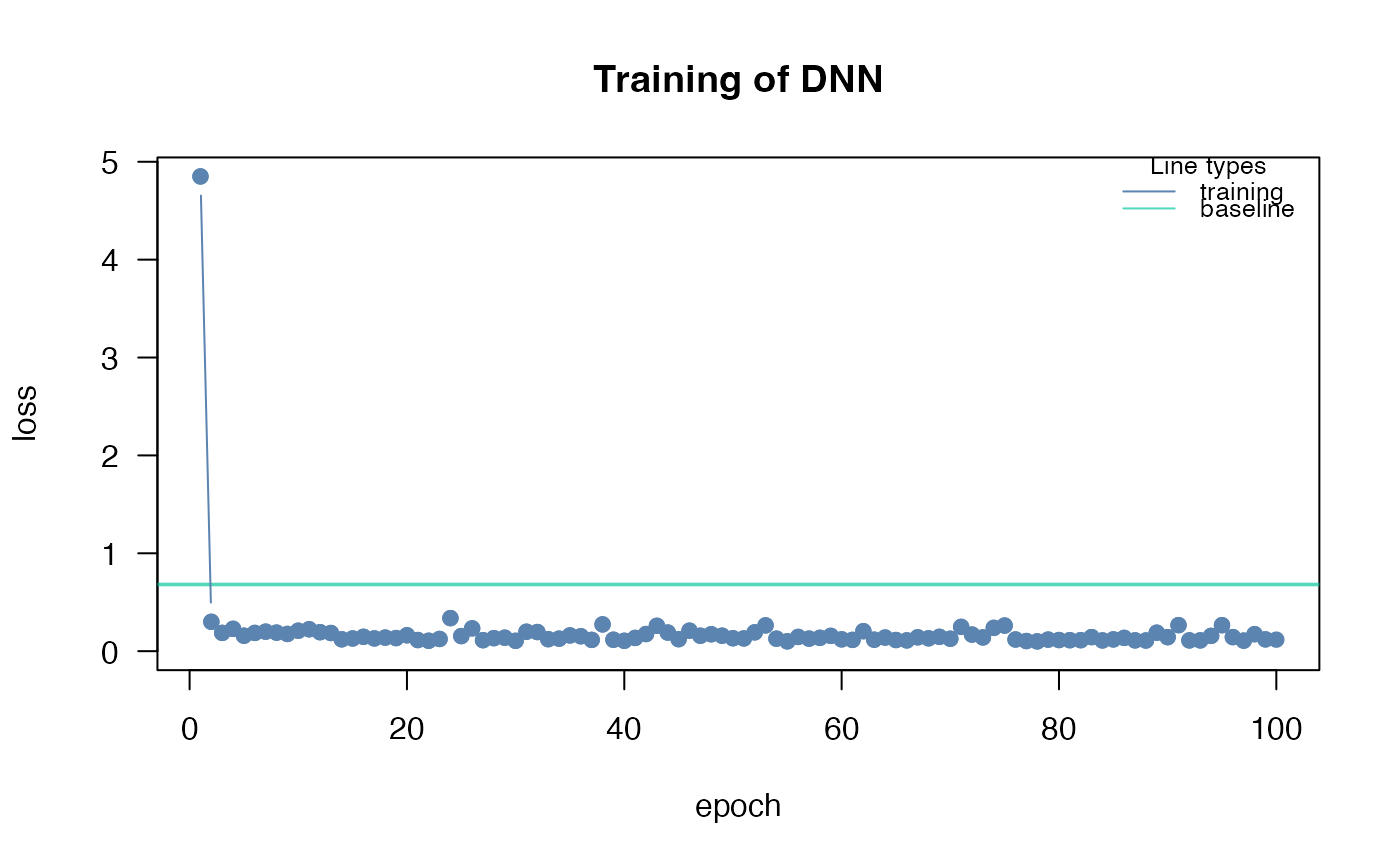

Examples

# \donttest{

if(torch::torch_is_installed()){
  library(cito)

  # Build and train network
  nn.fit <- dnn(Sepal.Length ~ ., data = datasets::iris)

  PDP(nn.fit, variable = "Petal.Length")
}

#> Loss at epoch 1: 3.664424, lr: 0.01000

#> Loss at epoch 2: 1.497374, lr: 0.01000

#> Loss at epoch 3: 0.530163, lr: 0.01000

#> Loss at epoch 4: 0.178987, lr: 0.01000

#> Loss at epoch 5: 0.288653, lr: 0.01000

#> Loss at epoch 6: 0.130974, lr: 0.01000

#> Loss at epoch 7: 0.197148, lr: 0.01000

#> Loss at epoch 8: 0.486733, lr: 0.01000

#> Loss at epoch 9: 0.372030, lr: 0.01000

#> Loss at epoch 10: 0.345576, lr: 0.01000

#> Loss at epoch 11: 0.261917, lr: 0.01000

#> Loss at epoch 12: 0.184841, lr: 0.01000

#> Loss at epoch 13: 0.142929, lr: 0.01000

#> Loss at epoch 14: 0.343258, lr: 0.01000

#> Loss at epoch 15: 0.153308, lr: 0.01000

#> Loss at epoch 16: 0.128067, lr: 0.01000

#> Loss at epoch 17: 0.231181, lr: 0.01000

#> Loss at epoch 18: 0.167183, lr: 0.01000

#> Loss at epoch 19: 0.338393, lr: 0.01000

#> Loss at epoch 20: 0.182522, lr: 0.01000

#> Loss at epoch 21: 0.242890, lr: 0.01000

#> Loss at epoch 22: 0.174132, lr: 0.01000

#> Loss at epoch 23: 0.160364, lr: 0.01000

#> Loss at epoch 24: 0.187050, lr: 0.01000

#> Loss at epoch 25: 0.196678, lr: 0.01000

#> Loss at epoch 26: 0.344192, lr: 0.01000

#> Loss at epoch 27: 0.185613, lr: 0.01000

#> Loss at epoch 28: 0.168092, lr: 0.01000

#> Loss at epoch 29: 0.132117, lr: 0.01000

#> Loss at epoch 30: 0.175003, lr: 0.01000

#> Loss at epoch 31: 0.156258, lr: 0.01000

#> Loss at epoch 32: 0.135925, lr: 0.01000

#> Loss at epoch 33: 0.122647, lr: 0.01000

#> Loss at epoch 34: 0.198828, lr: 0.01000

#> Loss at epoch 35: 0.265848, lr: 0.01000

#> Loss at epoch 36: 0.114820, lr: 0.01000

#> Loss at epoch 37: 0.174555, lr: 0.01000

#> Loss at epoch 38: 0.128287, lr: 0.01000

#> Loss at epoch 39: 0.212362, lr: 0.01000

#> Loss at epoch 40: 0.127659, lr: 0.01000

#> Loss at epoch 41: 0.138128, lr: 0.01000

#> Loss at epoch 42: 0.143118, lr: 0.01000

#> Loss at epoch 43: 0.169750, lr: 0.01000

#> Loss at epoch 44: 0.150594, lr: 0.01000

#> Loss at epoch 45: 0.291155, lr: 0.01000

#> Loss at epoch 46: 0.148066, lr: 0.01000

#> Loss at epoch 47: 0.144092, lr: 0.01000

#> Loss at epoch 48: 0.150879, lr: 0.01000

#> Loss at epoch 49: 0.173141, lr: 0.01000

#> Loss at epoch 50: 0.148515, lr: 0.01000

#> Loss at epoch 51: 0.120425, lr: 0.01000

#> Loss at epoch 52: 0.218867, lr: 0.01000

#> Loss at epoch 53: 0.123754, lr: 0.01000

#> Loss at epoch 54: 0.176253, lr: 0.01000

#> Loss at epoch 55: 0.233659, lr: 0.01000

#> Loss at epoch 56: 0.159904, lr: 0.01000

#> Loss at epoch 57: 0.134267, lr: 0.01000

#> Loss at epoch 58: 0.143162, lr: 0.01000

#> Loss at epoch 59: 0.145228, lr: 0.01000

#> Loss at epoch 60: 0.113980, lr: 0.01000

#> Loss at epoch 61: 0.126273, lr: 0.01000

#> Loss at epoch 62: 0.123818, lr: 0.01000

#> Loss at epoch 63: 0.130005, lr: 0.01000

#> Loss at epoch 64: 0.178676, lr: 0.01000

#> Loss at epoch 65: 0.246696, lr: 0.01000

#> Loss at epoch 66: 0.175567, lr: 0.01000

#> Loss at epoch 67: 0.156420, lr: 0.01000

#> Loss at epoch 68: 0.128855, lr: 0.01000

#> Loss at epoch 69: 0.198549, lr: 0.01000

#> Loss at epoch 70: 0.110308, lr: 0.01000

#> Loss at epoch 71: 0.126377, lr: 0.01000

#> Loss at epoch 72: 0.180684, lr: 0.01000

#> Loss at epoch 73: 0.165441, lr: 0.01000

#> Loss at epoch 74: 0.130092, lr: 0.01000

#> Loss at epoch 75: 0.166000, lr: 0.01000

#> Loss at epoch 76: 0.137054, lr: 0.01000

#> Loss at epoch 77: 0.164392, lr: 0.01000

#> Loss at epoch 78: 0.125858, lr: 0.01000

#> Loss at epoch 79: 0.179265, lr: 0.01000

#> Loss at epoch 80: 0.145095, lr: 0.01000

#> Loss at epoch 81: 0.224620, lr: 0.01000

#> Loss at epoch 82: 0.118003, lr: 0.01000

#> Loss at epoch 83: 0.127677, lr: 0.01000

#> Loss at epoch 84: 0.157284, lr: 0.01000

#> Loss at epoch 85: 0.128797, lr: 0.01000

#> Loss at epoch 86: 0.172269, lr: 0.01000

#> Loss at epoch 87: 0.126245, lr: 0.01000

#> Loss at epoch 88: 0.138377, lr: 0.01000

#> Loss at epoch 89: 0.106076, lr: 0.01000

#> Loss at epoch 90: 0.201372, lr: 0.01000

#> Loss at epoch 91: 0.160302, lr: 0.01000

#> Loss at epoch 92: 0.138648, lr: 0.01000

#> Loss at epoch 93: 0.115474, lr: 0.01000

#> Loss at epoch 94: 0.135377, lr: 0.01000

#> Loss at epoch 95: 0.137939, lr: 0.01000

#> Loss at epoch 96: 0.135082, lr: 0.01000

#> Loss at epoch 97: 0.111533, lr: 0.01000

#> Loss at epoch 98: 0.138479, lr: 0.01000

#> Loss at epoch 99: 0.157114, lr: 0.01000

#> Loss at epoch 100: 0.206865, lr: 0.01000


# }
