Helps create custom learning rate schedulers for dnn.

Usage

config_lr_scheduler(

type = c("lambda", "multiplicative", "reduce_on_plateau", "one_cycle", "step"),

verbose = FALSE,

...

)Value

object of class cito_lr_scheduler to give to dnn

Details

different learning rate scheduler need different variables, these functions will tell you which variables can be set:

lambda:

lr_lambdamultiplicative:

lr_multiplicativereduce_on_plateau:

lr_reduce_on_plateauone_cycle:

lr_one_cyclestep:

lr_step

Examples

# \donttest{

if(torch::torch_is_installed()){

library(cito)

# create learning rate scheduler object

scheduler <- config_lr_scheduler(type = "step",

step_size = 30,

gamma = 0.15,

verbose = TRUE)

# Build and train Network

nn.fit<- dnn(Sepal.Length~., data = datasets::iris, lr_scheduler = scheduler)

}

#> Learning rate Scheduler step

#> step_size: [30]

#> gamma: [0.15]

#> last_epoch: [-1]

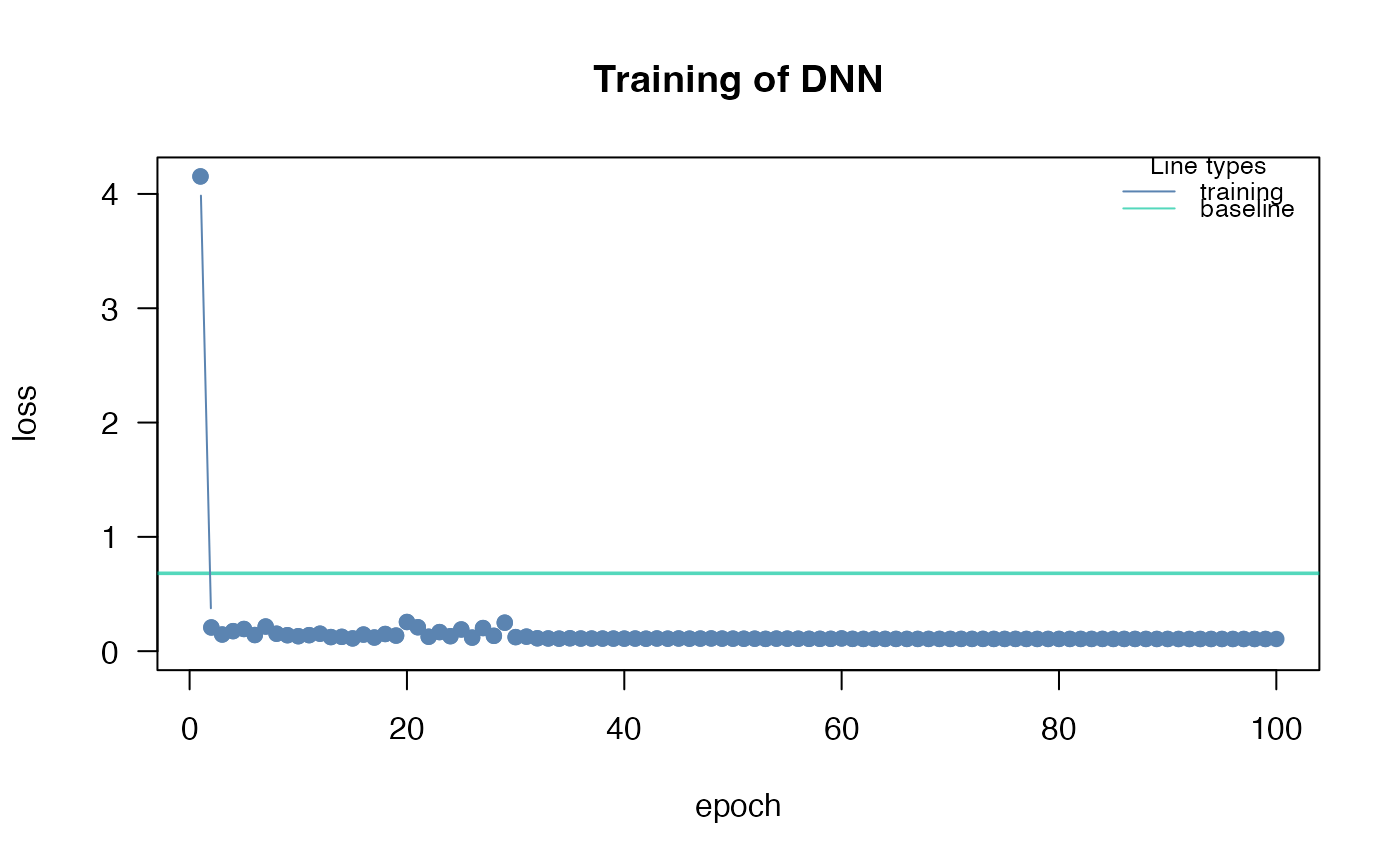

#> Loss at epoch 1: 3.954713, lr: 0.01000

#> Loss at epoch 2: 0.231113, lr: 0.01000

#> Loss at epoch 3: 0.231350, lr: 0.01000

#> Loss at epoch 4: 0.269389, lr: 0.01000

#> Loss at epoch 5: 0.169243, lr: 0.01000

#> Loss at epoch 6: 0.141261, lr: 0.01000

#> Loss at epoch 7: 0.185906, lr: 0.01000

#> Loss at epoch 8: 0.143617, lr: 0.01000

#> Loss at epoch 9: 0.140730, lr: 0.01000

#> Loss at epoch 10: 0.129844, lr: 0.01000

#> Loss at epoch 11: 0.136042, lr: 0.01000

#> Loss at epoch 12: 0.436134, lr: 0.01000

#> Loss at epoch 13: 0.318335, lr: 0.01000

#> Loss at epoch 14: 0.138690, lr: 0.01000

#> Loss at epoch 15: 0.246074, lr: 0.01000

#> Loss at epoch 16: 0.157595, lr: 0.01000

#> Loss at epoch 17: 0.294319, lr: 0.01000

#> Loss at epoch 18: 0.168762, lr: 0.01000

#> Loss at epoch 19: 0.176419, lr: 0.01000

#> Loss at epoch 20: 0.161251, lr: 0.01000

#> Loss at epoch 21: 0.166288, lr: 0.01000

#> Loss at epoch 22: 0.207131, lr: 0.01000

#> Loss at epoch 23: 0.141381, lr: 0.01000

#> Loss at epoch 24: 0.153455, lr: 0.01000

#> Loss at epoch 25: 0.143479, lr: 0.01000

#> Loss at epoch 26: 0.258239, lr: 0.01000

#> Loss at epoch 27: 0.197407, lr: 0.01000

#> Loss at epoch 28: 0.216296, lr: 0.01000

#> Loss at epoch 29: 0.185858, lr: 0.01000

#> Loss at epoch 30: 0.213892, lr: 0.00150

#> Loss at epoch 31: 0.123207, lr: 0.00150

#> Loss at epoch 32: 0.115316, lr: 0.00150

#> Loss at epoch 33: 0.114999, lr: 0.00150

#> Loss at epoch 34: 0.116099, lr: 0.00150

#> Loss at epoch 35: 0.116590, lr: 0.00150

#> Loss at epoch 36: 0.117337, lr: 0.00150

#> Loss at epoch 37: 0.114886, lr: 0.00150

#> Loss at epoch 38: 0.115280, lr: 0.00150

#> Loss at epoch 39: 0.115999, lr: 0.00150

#> Loss at epoch 40: 0.113874, lr: 0.00150

#> Loss at epoch 41: 0.114967, lr: 0.00150

#> Loss at epoch 42: 0.113593, lr: 0.00150

#> Loss at epoch 43: 0.113893, lr: 0.00150

#> Loss at epoch 44: 0.113710, lr: 0.00150

#> Loss at epoch 45: 0.115073, lr: 0.00150

#> Loss at epoch 46: 0.113688, lr: 0.00150

#> Loss at epoch 47: 0.115372, lr: 0.00150

#> Loss at epoch 48: 0.113541, lr: 0.00150

#> Loss at epoch 49: 0.112336, lr: 0.00150

#> Loss at epoch 50: 0.115721, lr: 0.00150

#> Loss at epoch 51: 0.113674, lr: 0.00150

#> Loss at epoch 52: 0.111781, lr: 0.00150

#> Loss at epoch 53: 0.113932, lr: 0.00150

#> Loss at epoch 54: 0.113738, lr: 0.00150

#> Loss at epoch 55: 0.112622, lr: 0.00150

#> Loss at epoch 56: 0.111825, lr: 0.00150

#> Loss at epoch 57: 0.112605, lr: 0.00150

#> Loss at epoch 58: 0.112345, lr: 0.00150

#> Loss at epoch 59: 0.111780, lr: 0.00150

#> Loss at epoch 60: 0.112041, lr: 0.00022

#> Loss at epoch 61: 0.111289, lr: 0.00022

#> Loss at epoch 62: 0.111016, lr: 0.00022

#> Loss at epoch 63: 0.111133, lr: 0.00022

#> Loss at epoch 64: 0.111072, lr: 0.00022

#> Loss at epoch 65: 0.111458, lr: 0.00022

#> Loss at epoch 66: 0.110895, lr: 0.00022

#> Loss at epoch 67: 0.111030, lr: 0.00022

#> Loss at epoch 68: 0.110732, lr: 0.00022

#> Loss at epoch 69: 0.111241, lr: 0.00022

#> Loss at epoch 70: 0.110739, lr: 0.00022

#> Loss at epoch 71: 0.110904, lr: 0.00022

#> Loss at epoch 72: 0.110879, lr: 0.00022

#> Loss at epoch 73: 0.110861, lr: 0.00022

#> Loss at epoch 74: 0.110837, lr: 0.00022

#> Loss at epoch 75: 0.110749, lr: 0.00022

#> Loss at epoch 76: 0.110807, lr: 0.00022

#> Loss at epoch 77: 0.110753, lr: 0.00022

#> Loss at epoch 78: 0.110695, lr: 0.00022

#> Loss at epoch 79: 0.110736, lr: 0.00022

#> Loss at epoch 80: 0.110750, lr: 0.00022

#> Loss at epoch 81: 0.110722, lr: 0.00022

#> Loss at epoch 82: 0.110700, lr: 0.00022

#> Loss at epoch 83: 0.110605, lr: 0.00022

#> Loss at epoch 84: 0.110559, lr: 0.00022

#> Loss at epoch 85: 0.110594, lr: 0.00022

#> Loss at epoch 86: 0.110858, lr: 0.00022

#> Loss at epoch 87: 0.110748, lr: 0.00022

#> Loss at epoch 88: 0.110585, lr: 0.00022

#> Loss at epoch 89: 0.110702, lr: 0.00022

#> Loss at epoch 90: 0.110729, lr: 0.00003

#> Loss at epoch 91: 0.110313, lr: 0.00003

#> Loss at epoch 92: 0.110357, lr: 0.00003

#> Loss at epoch 93: 0.110332, lr: 0.00003

#> Loss at epoch 94: 0.110322, lr: 0.00003

#> Loss at epoch 95: 0.110314, lr: 0.00003

#> Loss at epoch 96: 0.110295, lr: 0.00003

#> Loss at epoch 97: 0.110314, lr: 0.00003

#> Loss at epoch 98: 0.110305, lr: 0.00003

#> Loss at epoch 99: 0.110290, lr: 0.00003

#> Loss at epoch 100: 0.110298, lr: 0.00003

# }

#> Loss at epoch 2: 0.231113, lr: 0.01000

#> Loss at epoch 3: 0.231350, lr: 0.01000

#> Loss at epoch 4: 0.269389, lr: 0.01000

#> Loss at epoch 5: 0.169243, lr: 0.01000

#> Loss at epoch 6: 0.141261, lr: 0.01000

#> Loss at epoch 7: 0.185906, lr: 0.01000

#> Loss at epoch 8: 0.143617, lr: 0.01000

#> Loss at epoch 9: 0.140730, lr: 0.01000

#> Loss at epoch 10: 0.129844, lr: 0.01000

#> Loss at epoch 11: 0.136042, lr: 0.01000

#> Loss at epoch 12: 0.436134, lr: 0.01000

#> Loss at epoch 13: 0.318335, lr: 0.01000

#> Loss at epoch 14: 0.138690, lr: 0.01000

#> Loss at epoch 15: 0.246074, lr: 0.01000

#> Loss at epoch 16: 0.157595, lr: 0.01000

#> Loss at epoch 17: 0.294319, lr: 0.01000

#> Loss at epoch 18: 0.168762, lr: 0.01000

#> Loss at epoch 19: 0.176419, lr: 0.01000

#> Loss at epoch 20: 0.161251, lr: 0.01000

#> Loss at epoch 21: 0.166288, lr: 0.01000

#> Loss at epoch 22: 0.207131, lr: 0.01000

#> Loss at epoch 23: 0.141381, lr: 0.01000

#> Loss at epoch 24: 0.153455, lr: 0.01000

#> Loss at epoch 25: 0.143479, lr: 0.01000

#> Loss at epoch 26: 0.258239, lr: 0.01000

#> Loss at epoch 27: 0.197407, lr: 0.01000

#> Loss at epoch 28: 0.216296, lr: 0.01000

#> Loss at epoch 29: 0.185858, lr: 0.01000

#> Loss at epoch 30: 0.213892, lr: 0.00150

#> Loss at epoch 31: 0.123207, lr: 0.00150

#> Loss at epoch 32: 0.115316, lr: 0.00150

#> Loss at epoch 33: 0.114999, lr: 0.00150

#> Loss at epoch 34: 0.116099, lr: 0.00150

#> Loss at epoch 35: 0.116590, lr: 0.00150

#> Loss at epoch 36: 0.117337, lr: 0.00150

#> Loss at epoch 37: 0.114886, lr: 0.00150

#> Loss at epoch 38: 0.115280, lr: 0.00150

#> Loss at epoch 39: 0.115999, lr: 0.00150

#> Loss at epoch 40: 0.113874, lr: 0.00150

#> Loss at epoch 41: 0.114967, lr: 0.00150

#> Loss at epoch 42: 0.113593, lr: 0.00150

#> Loss at epoch 43: 0.113893, lr: 0.00150

#> Loss at epoch 44: 0.113710, lr: 0.00150

#> Loss at epoch 45: 0.115073, lr: 0.00150

#> Loss at epoch 46: 0.113688, lr: 0.00150

#> Loss at epoch 47: 0.115372, lr: 0.00150

#> Loss at epoch 48: 0.113541, lr: 0.00150

#> Loss at epoch 49: 0.112336, lr: 0.00150

#> Loss at epoch 50: 0.115721, lr: 0.00150

#> Loss at epoch 51: 0.113674, lr: 0.00150

#> Loss at epoch 52: 0.111781, lr: 0.00150

#> Loss at epoch 53: 0.113932, lr: 0.00150

#> Loss at epoch 54: 0.113738, lr: 0.00150

#> Loss at epoch 55: 0.112622, lr: 0.00150

#> Loss at epoch 56: 0.111825, lr: 0.00150

#> Loss at epoch 57: 0.112605, lr: 0.00150

#> Loss at epoch 58: 0.112345, lr: 0.00150

#> Loss at epoch 59: 0.111780, lr: 0.00150

#> Loss at epoch 60: 0.112041, lr: 0.00022

#> Loss at epoch 61: 0.111289, lr: 0.00022

#> Loss at epoch 62: 0.111016, lr: 0.00022

#> Loss at epoch 63: 0.111133, lr: 0.00022

#> Loss at epoch 64: 0.111072, lr: 0.00022

#> Loss at epoch 65: 0.111458, lr: 0.00022

#> Loss at epoch 66: 0.110895, lr: 0.00022

#> Loss at epoch 67: 0.111030, lr: 0.00022

#> Loss at epoch 68: 0.110732, lr: 0.00022

#> Loss at epoch 69: 0.111241, lr: 0.00022

#> Loss at epoch 70: 0.110739, lr: 0.00022

#> Loss at epoch 71: 0.110904, lr: 0.00022

#> Loss at epoch 72: 0.110879, lr: 0.00022

#> Loss at epoch 73: 0.110861, lr: 0.00022

#> Loss at epoch 74: 0.110837, lr: 0.00022

#> Loss at epoch 75: 0.110749, lr: 0.00022

#> Loss at epoch 76: 0.110807, lr: 0.00022

#> Loss at epoch 77: 0.110753, lr: 0.00022

#> Loss at epoch 78: 0.110695, lr: 0.00022

#> Loss at epoch 79: 0.110736, lr: 0.00022

#> Loss at epoch 80: 0.110750, lr: 0.00022

#> Loss at epoch 81: 0.110722, lr: 0.00022

#> Loss at epoch 82: 0.110700, lr: 0.00022

#> Loss at epoch 83: 0.110605, lr: 0.00022

#> Loss at epoch 84: 0.110559, lr: 0.00022

#> Loss at epoch 85: 0.110594, lr: 0.00022

#> Loss at epoch 86: 0.110858, lr: 0.00022

#> Loss at epoch 87: 0.110748, lr: 0.00022

#> Loss at epoch 88: 0.110585, lr: 0.00022

#> Loss at epoch 89: 0.110702, lr: 0.00022

#> Loss at epoch 90: 0.110729, lr: 0.00003

#> Loss at epoch 91: 0.110313, lr: 0.00003

#> Loss at epoch 92: 0.110357, lr: 0.00003

#> Loss at epoch 93: 0.110332, lr: 0.00003

#> Loss at epoch 94: 0.110322, lr: 0.00003

#> Loss at epoch 95: 0.110314, lr: 0.00003

#> Loss at epoch 96: 0.110295, lr: 0.00003

#> Loss at epoch 97: 0.110314, lr: 0.00003

#> Loss at epoch 98: 0.110305, lr: 0.00003

#> Loss at epoch 99: 0.110290, lr: 0.00003

#> Loss at epoch 100: 0.110298, lr: 0.00003

# }