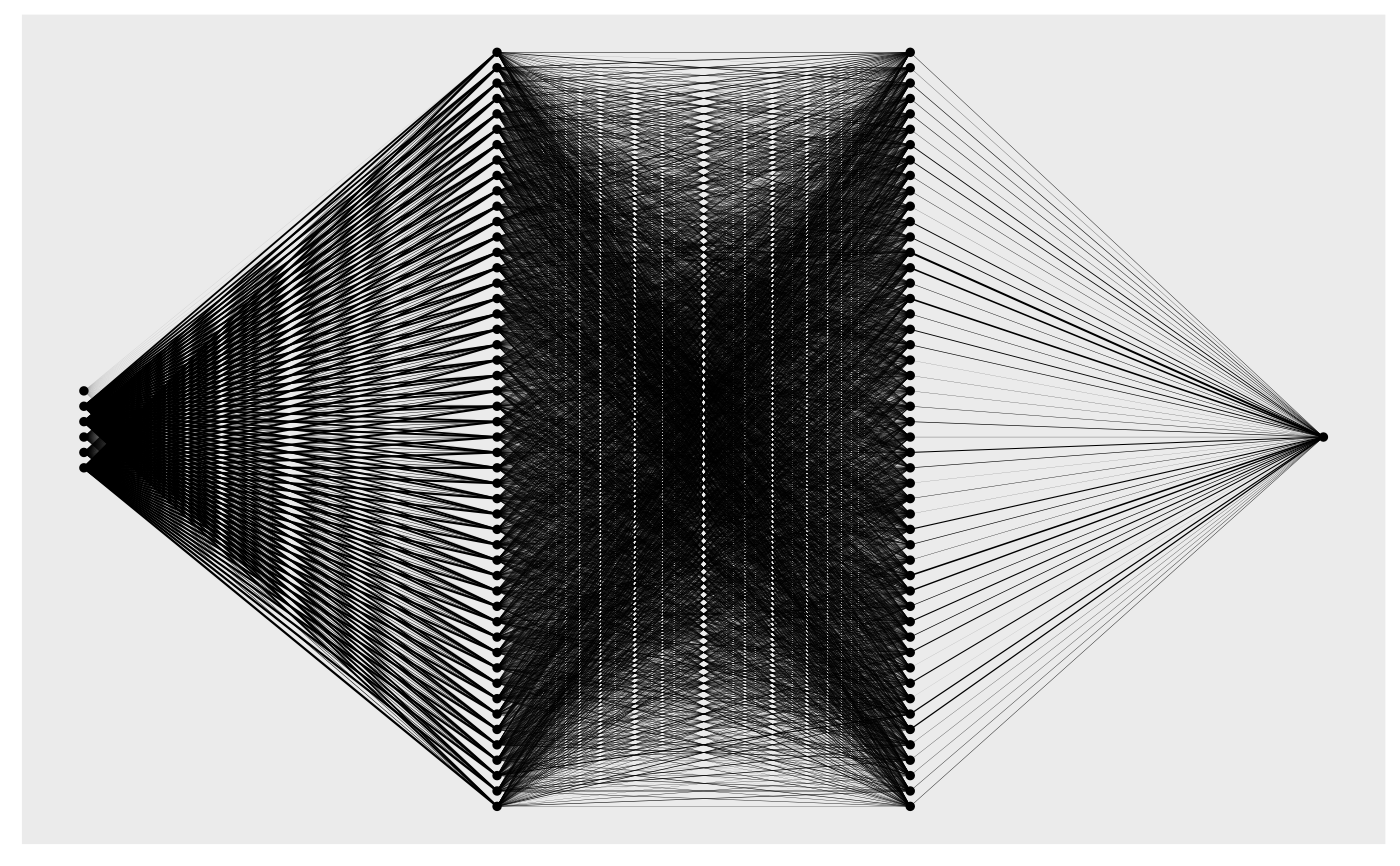

Creates a graph plot that gives an overview of the network architecture.

Source: R/dnn.R

plot.citodnn.Rd

Arguments

- x

a model created by dnn

- node_size

size of the nodes in the plot

- scale_edges

edge weights are scaled relative to the other weights of the same layer

- ...

no further functionality implemented yet

- which_model

which model from the ensemble should be plotted
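The arguments above could be combined roughly as in the following sketch. The values passed to `node_size` and `scale_edges` are illustrative assumptions (a number and a logical flag); this page does not state their defaults or accepted ranges, so check the package help before relying on them.

```r
# Sketch only: both values are assumptions chosen for illustration.
node_size_value <- 2       # assumed: a positive number controlling node size
scale_edges_value <- TRUE  # assumed: a logical flag enabling per-layer edge scaling

# Run only when cito and a working torch backend are available.
if (requireNamespace("cito", quietly = TRUE) &&
    requireNamespace("torch", quietly = TRUE) &&
    torch::torch_is_installed()) {
  library(cito)
  set.seed(222)

  # Fit a small network on iris, as in the example on this page.
  nn.fit <- dnn(Sepal.Length ~ ., data = datasets::iris)

  # Plot the architecture with the assumed argument values.
  plot(nn.fit, node_size = node_size_value, scale_edges = scale_edges_value)
}
```

Larger `node_size` values presumably help readability for small networks, while `scale_edges` makes relative weight magnitudes within a layer easier to compare.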

Examples

# \donttest{

if(torch::torch_is_installed()){

library(cito)

set.seed(222)

validation_set<- sample(c(1:nrow(datasets::iris)),25)

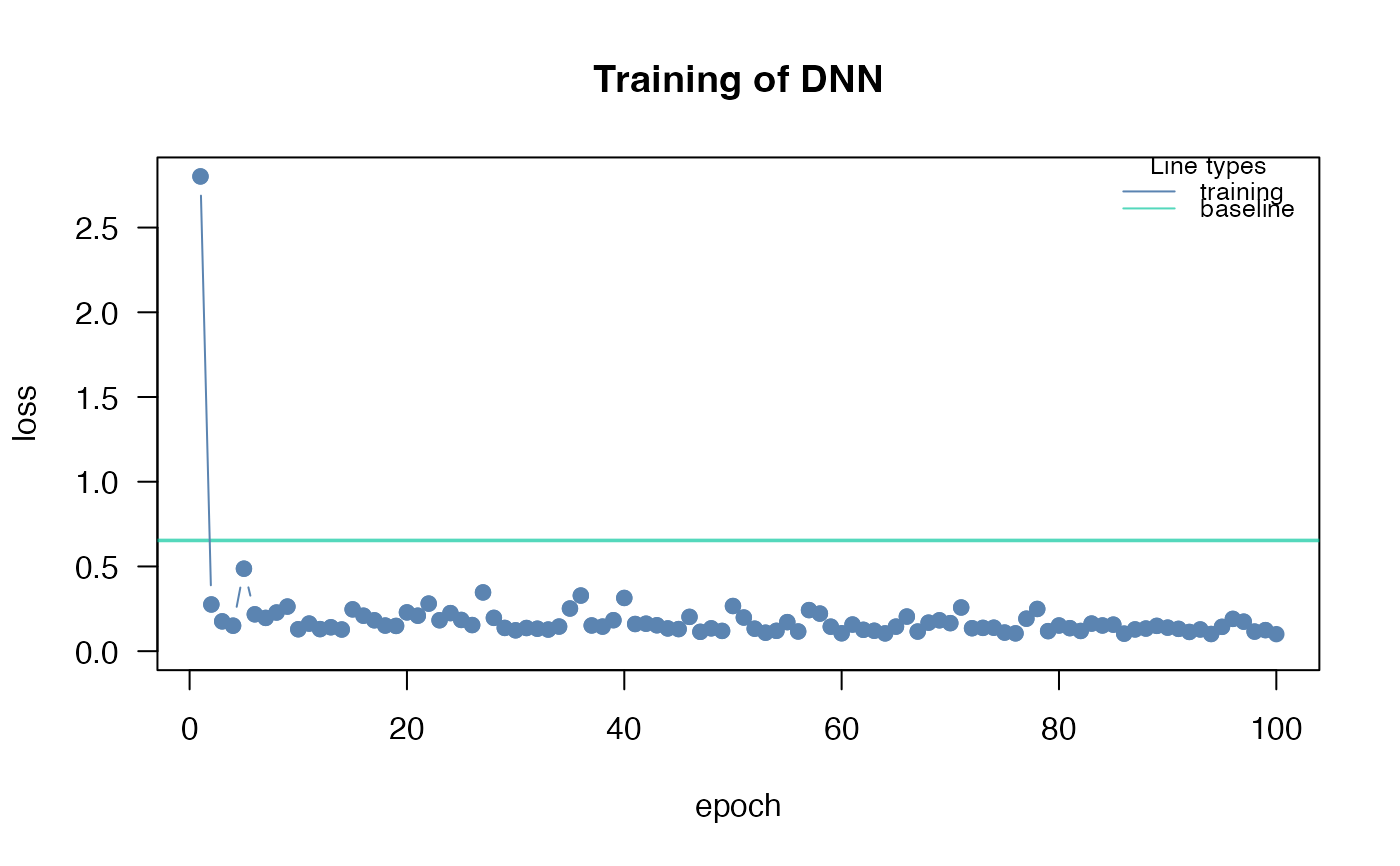

# Build and train Network

nn.fit<- dnn(Sepal.Length~., data = datasets::iris[-validation_set,])

plot(nn.fit)

}

#> Loss at epoch 1: 2.496234, lr: 0.01000

#> Loss at epoch 2: 0.275984, lr: 0.01000

#> Loss at epoch 3: 0.299044, lr: 0.01000

#> Loss at epoch 4: 0.222605, lr: 0.01000

#> Loss at epoch 5: 0.287249, lr: 0.01000

#> Loss at epoch 6: 0.250049, lr: 0.01000

#> Loss at epoch 7: 0.359944, lr: 0.01000

#> Loss at epoch 8: 0.206016, lr: 0.01000

#> Loss at epoch 9: 0.163694, lr: 0.01000

#> Loss at epoch 10: 0.140023, lr: 0.01000

#> Loss at epoch 11: 0.141925, lr: 0.01000

#> Loss at epoch 12: 0.151213, lr: 0.01000

#> Loss at epoch 13: 0.149036, lr: 0.01000

#> Loss at epoch 14: 0.398178, lr: 0.01000

#> Loss at epoch 15: 0.183247, lr: 0.01000

#> Loss at epoch 16: 0.356197, lr: 0.01000

#> Loss at epoch 17: 0.132249, lr: 0.01000

#> Loss at epoch 18: 0.187741, lr: 0.01000

#> Loss at epoch 19: 0.184170, lr: 0.01000

#> Loss at epoch 20: 0.272680, lr: 0.01000

#> Loss at epoch 21: 0.172288, lr: 0.01000

#> Loss at epoch 22: 0.147220, lr: 0.01000

#> Loss at epoch 23: 0.130538, lr: 0.01000

#> Loss at epoch 24: 0.126134, lr: 0.01000

#> Loss at epoch 25: 0.129028, lr: 0.01000

#> Loss at epoch 26: 0.150426, lr: 0.01000

#> Loss at epoch 27: 0.158499, lr: 0.01000

#> Loss at epoch 28: 0.157252, lr: 0.01000

#> Loss at epoch 29: 0.158960, lr: 0.01000

#> Loss at epoch 30: 0.216067, lr: 0.01000

#> Loss at epoch 31: 0.149803, lr: 0.01000

#> Loss at epoch 32: 0.173776, lr: 0.01000

#> Loss at epoch 33: 0.176177, lr: 0.01000

#> Loss at epoch 34: 0.116247, lr: 0.01000

#> Loss at epoch 35: 0.131292, lr: 0.01000

#> Loss at epoch 36: 0.128969, lr: 0.01000

#> Loss at epoch 37: 0.176717, lr: 0.01000

#> Loss at epoch 38: 0.120794, lr: 0.01000

#> Loss at epoch 39: 0.164252, lr: 0.01000

#> Loss at epoch 40: 0.164758, lr: 0.01000

#> Loss at epoch 41: 0.167306, lr: 0.01000

#> Loss at epoch 42: 0.148975, lr: 0.01000

#> Loss at epoch 43: 0.165813, lr: 0.01000

#> Loss at epoch 44: 0.115868, lr: 0.01000

#> Loss at epoch 45: 0.119369, lr: 0.01000

#> Loss at epoch 46: 0.134861, lr: 0.01000

#> Loss at epoch 47: 0.131380, lr: 0.01000

#> Loss at epoch 48: 0.117812, lr: 0.01000

#> Loss at epoch 49: 0.182973, lr: 0.01000

#> Loss at epoch 50: 0.171997, lr: 0.01000

#> Loss at epoch 51: 0.106516, lr: 0.01000

#> Loss at epoch 52: 0.118296, lr: 0.01000

#> Loss at epoch 53: 0.179335, lr: 0.01000

#> Loss at epoch 54: 0.116634, lr: 0.01000

#> Loss at epoch 55: 0.121189, lr: 0.01000

#> Loss at epoch 56: 0.175579, lr: 0.01000

#> Loss at epoch 57: 0.224448, lr: 0.01000

#> Loss at epoch 58: 0.135799, lr: 0.01000

#> Loss at epoch 59: 0.128182, lr: 0.01000

#> Loss at epoch 60: 0.124716, lr: 0.01000

#> Loss at epoch 61: 0.114326, lr: 0.01000

#> Loss at epoch 62: 0.133644, lr: 0.01000

#> Loss at epoch 63: 0.154931, lr: 0.01000

#> Loss at epoch 64: 0.120528, lr: 0.01000

#> Loss at epoch 65: 0.142507, lr: 0.01000

#> Loss at epoch 66: 0.140255, lr: 0.01000

#> Loss at epoch 67: 0.146326, lr: 0.01000

#> Loss at epoch 68: 0.177324, lr: 0.01000

#> Loss at epoch 69: 0.163104, lr: 0.01000

#> Loss at epoch 70: 0.210458, lr: 0.01000

#> Loss at epoch 71: 0.133166, lr: 0.01000

#> Loss at epoch 72: 0.119485, lr: 0.01000

#> Loss at epoch 73: 0.181278, lr: 0.01000

#> Loss at epoch 74: 0.113691, lr: 0.01000

#> Loss at epoch 75: 0.137806, lr: 0.01000

#> Loss at epoch 76: 0.125155, lr: 0.01000

#> Loss at epoch 77: 0.190301, lr: 0.01000

#> Loss at epoch 78: 0.104105, lr: 0.01000

#> Loss at epoch 79: 0.184372, lr: 0.01000

#> Loss at epoch 80: 0.197652, lr: 0.01000

#> Loss at epoch 81: 0.305428, lr: 0.01000

#> Loss at epoch 82: 0.171503, lr: 0.01000

#> Loss at epoch 83: 0.113379, lr: 0.01000

#> Loss at epoch 84: 0.117211, lr: 0.01000

#> Loss at epoch 85: 0.110590, lr: 0.01000

#> Loss at epoch 86: 0.122922, lr: 0.01000

#> Loss at epoch 87: 0.204976, lr: 0.01000

#> Loss at epoch 88: 0.167082, lr: 0.01000

#> Loss at epoch 89: 0.145538, lr: 0.01000

#> Loss at epoch 90: 0.142150, lr: 0.01000

#> Loss at epoch 91: 0.131696, lr: 0.01000

#> Loss at epoch 92: 0.129867, lr: 0.01000

#> Loss at epoch 93: 0.145236, lr: 0.01000

#> Loss at epoch 94: 0.141025, lr: 0.01000

#> Loss at epoch 95: 0.111752, lr: 0.01000

#> Loss at epoch 96: 0.185524, lr: 0.01000

#> Loss at epoch 97: 0.231474, lr: 0.01000

#> Loss at epoch 98: 0.196479, lr: 0.01000

#> Loss at epoch 99: 0.151052, lr: 0.01000

#> Loss at epoch 100: 0.151423, lr: 0.01000

# }
