Average conditional effects (ACE) are based on the local derivatives of the prediction with respect to each feature, evaluated at each observation. They are similar to marginal effects, and the average of these conditional effects approximates the linear effect of a feature (see Pichler and Hartig, 2023, for more details). You can use this function to calculate either main effects (the diagonal of the result, see the example) or interaction effects between features (the off-diagonal elements).
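The idea of averaging local derivatives can be sketched in base R without any neural network. The snippet below is a minimal illustration, not cito's internal code: `f` is a hypothetical prediction function standing in for a trained model, `conditional_effect` is a helper invented for this sketch, and the forward difference is an assumption about how a derivative could be approximated with a step of size `eps`.

```r
# Illustrative only: a base-R finite-difference sketch, not cito's implementation.
# `f` is a hypothetical prediction function standing in for a trained network.
f <- function(X) 2 * X[, 1] + X[, 2]^2

set.seed(1)
X <- cbind(x1 = runif(50), x2 = runif(50))
eps <- 0.1  # step size, playing the role of `epsilon`

# Conditional effect of feature j for every observation (forward difference).
conditional_effect <- function(f, X, j, eps) {
  X_shift <- X
  X_shift[, j] <- X_shift[, j] + eps
  (f(X_shift) - f(X)) / eps
}

# One column of conditional effects per feature, one row per observation.
ce <- sapply(seq_len(ncol(X)), function(j) conditional_effect(f, X, j, eps))
colnames(ce) <- colnames(X)

# Average conditional effects: one value per feature.
ace <- colMeans(ce)
ace
```

For the linear term the average recovers the slope of 2, while for the quadratic term it returns the slope averaged over the observed values of `x2`, which is why the ACE is described as an approximation of a linear effect.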

To obtain uncertainties for these effects, enable bootstrapping in the dnn() function via its bootstrap argument (see example).
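How bootstrapping can turn an ACE into a standard error and a p-value may be easier to see in a small base-R sketch. Everything here is an assumption made for illustration: a linear model stands in for the network, `ace_one` is a hypothetical helper, and the z-test is one plausible way to build such a table, not a description of what cito does internally.

```r
# Illustrative only: a linear model stands in for the network, and the
# z-test below is an assumption about how such a summary could be built.
set.seed(42)
dat <- datasets::iris[, 1:4]
eps <- 0.1

# ACE of one feature via forward differences on the model predictions.
ace_one <- function(model, data, feature, eps) {
  shifted <- data
  shifted[[feature]] <- shifted[[feature]] + eps
  mean((predict(model, shifted) - predict(model, data)) / eps)
}

# Refit on resampled rows and recompute the ACE each time.
n_boot <- 30
boot_ace <- replicate(n_boot, {
  idx <- sample(nrow(dat), replace = TRUE)
  fit <- lm(Sepal.Length ~ ., data = dat[idx, ])
  ace_one(fit, dat[idx, ], "Petal.Length", eps)
})

est <- mean(boot_ace)      # bootstrap estimate of the ACE
se  <- sd(boot_ace)        # spread across refits as the standard error
z   <- est / se
p   <- 2 * pnorm(-abs(z))  # two-sided p-value under a normal approximation
c(ACE = est, Std.Err = se, z = z, p = p)
```

The number of refits matches the `bootstrap = 30L` setting used in the example further down; more refits give a more stable standard error at a proportionally higher training cost.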

Usage

conditionalEffects(

object,

interactions = FALSE,

epsilon = 0.1,

device = c("cpu", "cuda", "mps"),

indices = NULL,

data = NULL,

type = "response",

...

)

# S3 method for class 'citodnn'

conditionalEffects(

object,

interactions = FALSE,

epsilon = 0.1,

device = c("cpu", "cuda", "mps"),

indices = NULL,

data = NULL,

type = "response",

...

)

# S3 method for class 'citodnnBootstrap'

conditionalEffects(

object,

interactions = FALSE,

epsilon = 0.1,

device = c("cpu", "cuda", "mps"),

indices = NULL,

data = NULL,

type = "response",

...

)

Arguments

- object

object of class citodnn

- interactions

calculate interactions or not (computationally expensive)

- epsilon

difference used to calculate derivatives

- device

device on which the calculations are run ("cpu", "cuda", or "mps")

- indices

indices of the variables for which the ACE are calculated

- data

data which is used to calculate the ACE

- type

scale on which the ACE are calculated ("response" or "link")

- ...

additional arguments that are passed to the predict function
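The roles of `interactions` and `epsilon` can be illustrated with a mixed finite difference in base R. This is a sketch under stated assumptions rather than cito's implementation: `f` is a hypothetical prediction function with a known interaction, `interaction_effect` is a helper invented here, and the forward-difference scheme is only one possible choice. It is written for pairs of distinct features (j not equal to k).

```r
# Illustrative only: hypothetical prediction function with a known interaction.
f <- function(X) 3 * X[, 1] * X[, 2] + X[, 1]

set.seed(1)
X <- cbind(x1 = runif(50), x2 = runif(50))
eps <- 0.1  # step size, playing the role of `epsilon`

# Mixed second-order forward difference for a pair of distinct features (j, k).
interaction_effect <- function(f, X, j, k, eps) {
  shift <- function(X, cols) {
    X[, cols] <- X[, cols] + eps
    X
  }
  (f(shift(X, c(j, k))) - f(shift(X, j)) - f(shift(X, k)) + f(X)) / eps^2
}

# Average over observations; close to 3, the true cross-derivative of f.
mean(interaction_effect(f, X, 1, 2, eps))
```

Each pair of features needs four prediction passes in this scheme, so the cost grows with the square of the number of features, which is consistent with the note that interactions are computationally expensive.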

Value

An S3 object of class "conditionalEffects" is returned.

The list consists of the following attributes:

- result

3-dimensional array with the raw results

- mean

Matrix, average conditional effects

- abs

Matrix, summed absolute conditional effects

- sd

Matrix, standard deviation of the conditional effects
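The relation between the raw array and the three summary matrices can be sketched as follows. The array layout (observations by features by features) is an assumption made for this illustration, and the values are random placeholders rather than output from a fitted model.

```r
# Illustrative only: the array layout (observations x features x features)
# is an assumption, and the values are random placeholders.
set.seed(1)
feats <- c("Sepal.Width", "Petal.Length", "Petal.Width")
raw <- array(rnorm(150 * 3 * 3), dim = c(150, 3, 3),
             dimnames = list(NULL, feats, feats))

# Collapse over observations, leaving one feature x feature matrix each.
ace_mean <- apply(raw, c(2, 3), mean)                     # average effects
ace_abs  <- apply(raw, c(2, 3), function(v) sum(abs(v)))  # summed absolute effects
ace_sd   <- apply(raw, c(2, 3), sd)                       # spread of the effects

# Main effects sit on the diagonal, interactions off the diagonal.
diag(ace_mean)
```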

References

Scholbeck, C. A., Casalicchio, G., Molnar, C., Bischl, B., & Heumann, C. (2022). Marginal effects for non-linear prediction functions. arXiv preprint arXiv:2201.08837.

Pichler, M., & Hartig, F. (2023). Can predictive models be used for causal inference?. arXiv preprint arXiv:2306.10551.

Examples

# \donttest{

if(torch::torch_is_installed()){

library(cito)

# Build and train Network

nn.fit = dnn(Sepal.Length~., data = datasets::iris)

# Calculate average conditional effects

ACE = conditionalEffects(nn.fit)

## Main effects (categorical features are not supported)

ACE

## With interaction effects:

ACE = conditionalEffects(nn.fit, interactions = TRUE)

## The off diagonal elements are the interaction effects

ACE[[1]]$mean

## ACE is a list with one element per response class

## Sepal.Length is a single response, so the ACE object

## has only one list element

# Re-train NN with bootstrapping to obtain standard errors

nn.fit = dnn(Sepal.Length~., data = datasets::iris, bootstrap = 30L)

## The summary method also calculates the conditional effects, and if

## bootstrapping was used, it also reports standard errors and p-values:

summary(nn.fit)

}

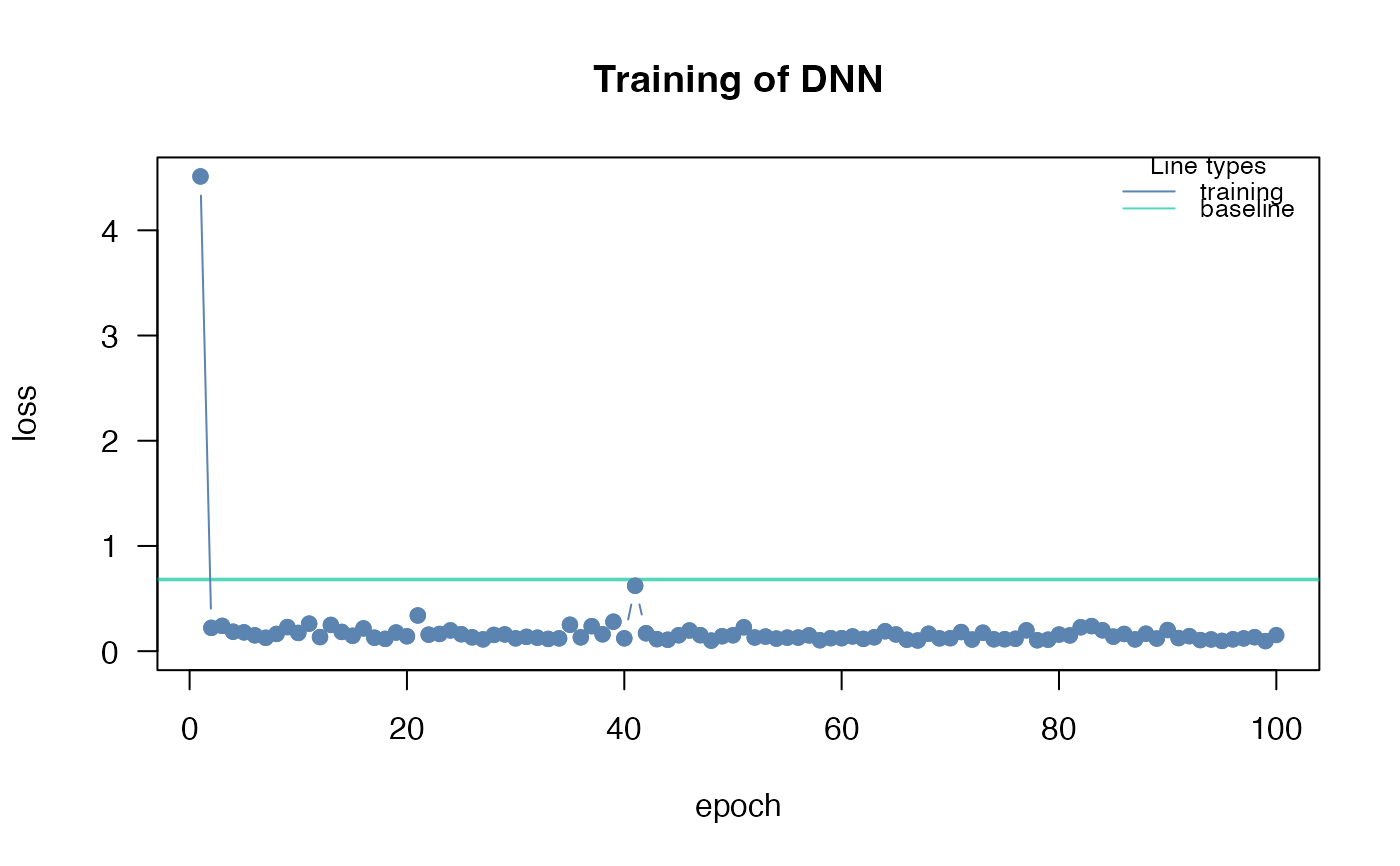

#> Loss at epoch 1: 4.363048, lr: 0.01000

#> Loss at epoch 2: 0.272192, lr: 0.01000

#> Loss at epoch 3: 0.374940, lr: 0.01000

#> Loss at epoch 4: 0.276003, lr: 0.01000

#> Loss at epoch 5: 0.176003, lr: 0.01000

#> Loss at epoch 6: 0.162379, lr: 0.01000

#> Loss at epoch 7: 0.162826, lr: 0.01000

#> Loss at epoch 8: 0.218452, lr: 0.01000

#> Loss at epoch 9: 0.153501, lr: 0.01000

#> Loss at epoch 10: 0.159021, lr: 0.01000

#> Loss at epoch 11: 0.199770, lr: 0.01000

#> Loss at epoch 12: 0.276008, lr: 0.01000

#> Loss at epoch 13: 0.136575, lr: 0.01000

#> Loss at epoch 14: 0.134161, lr: 0.01000

#> Loss at epoch 15: 0.140879, lr: 0.01000

#> Loss at epoch 16: 0.154157, lr: 0.01000

#> Loss at epoch 17: 0.137692, lr: 0.01000

#> Loss at epoch 18: 0.280078, lr: 0.01000

#> Loss at epoch 19: 0.152508, lr: 0.01000

#> Loss at epoch 20: 0.201336, lr: 0.01000

#> Loss at epoch 21: 0.176678, lr: 0.01000

#> Loss at epoch 22: 0.168723, lr: 0.01000

#> Loss at epoch 23: 0.217394, lr: 0.01000

#> Loss at epoch 24: 0.126510, lr: 0.01000

#> Loss at epoch 25: 0.142637, lr: 0.01000

#> Loss at epoch 26: 0.159910, lr: 0.01000

#> Loss at epoch 27: 0.129799, lr: 0.01000

#> Loss at epoch 28: 0.119393, lr: 0.01000

#> Loss at epoch 29: 0.128175, lr: 0.01000

#> Loss at epoch 30: 0.139445, lr: 0.01000

#> Loss at epoch 31: 0.120286, lr: 0.01000

#> Loss at epoch 32: 0.153908, lr: 0.01000

#> Loss at epoch 33: 0.139932, lr: 0.01000

#> Loss at epoch 34: 0.196470, lr: 0.01000

#> Loss at epoch 35: 0.222016, lr: 0.01000

#> Loss at epoch 36: 0.115350, lr: 0.01000

#> Loss at epoch 37: 0.130246, lr: 0.01000

#> Loss at epoch 38: 0.128341, lr: 0.01000

#> Loss at epoch 39: 0.139921, lr: 0.01000

#> Loss at epoch 40: 0.133473, lr: 0.01000

#> Loss at epoch 41: 0.190675, lr: 0.01000

#> Loss at epoch 42: 0.152598, lr: 0.01000

#> Loss at epoch 43: 0.127298, lr: 0.01000

#> Loss at epoch 44: 0.143021, lr: 0.01000

#> Loss at epoch 45: 0.176637, lr: 0.01000

#> Loss at epoch 46: 0.227017, lr: 0.01000

#> Loss at epoch 47: 0.116094, lr: 0.01000

#> Loss at epoch 48: 0.169703, lr: 0.01000

#> Loss at epoch 49: 0.115200, lr: 0.01000

#> Loss at epoch 50: 0.194225, lr: 0.01000

#> Loss at epoch 51: 0.121132, lr: 0.01000

#> Loss at epoch 52: 0.140460, lr: 0.01000

#> Loss at epoch 53: 0.128924, lr: 0.01000

#> Loss at epoch 54: 0.188344, lr: 0.01000

#> Loss at epoch 55: 0.114714, lr: 0.01000

#> Loss at epoch 56: 0.136857, lr: 0.01000

#> Loss at epoch 57: 0.224927, lr: 0.01000

#> Loss at epoch 58: 0.151434, lr: 0.01000

#> Loss at epoch 59: 0.121373, lr: 0.01000

#> Loss at epoch 60: 0.196071, lr: 0.01000

#> Loss at epoch 61: 0.142148, lr: 0.01000

#> Loss at epoch 62: 0.112751, lr: 0.01000

#> Loss at epoch 63: 0.120279, lr: 0.01000

#> Loss at epoch 64: 0.124868, lr: 0.01000

#> Loss at epoch 65: 0.122346, lr: 0.01000

#> Loss at epoch 66: 0.151730, lr: 0.01000

#> Loss at epoch 67: 0.136396, lr: 0.01000

#> Loss at epoch 68: 0.134735, lr: 0.01000

#> Loss at epoch 69: 0.145546, lr: 0.01000

#> Loss at epoch 70: 0.112017, lr: 0.01000

#> Loss at epoch 71: 0.127638, lr: 0.01000

#> Loss at epoch 72: 0.178938, lr: 0.01000

#> Loss at epoch 73: 0.224137, lr: 0.01000

#> Loss at epoch 74: 0.241549, lr: 0.01000

#> Loss at epoch 75: 0.176176, lr: 0.01000

#> Loss at epoch 76: 0.119769, lr: 0.01000

#> Loss at epoch 77: 0.138289, lr: 0.01000

#> Loss at epoch 78: 0.149200, lr: 0.01000

#> Loss at epoch 79: 0.129378, lr: 0.01000

#> Loss at epoch 80: 0.153158, lr: 0.01000

#> Loss at epoch 81: 0.140047, lr: 0.01000

#> Loss at epoch 82: 0.115352, lr: 0.01000

#> Loss at epoch 83: 0.111738, lr: 0.01000

#> Loss at epoch 84: 0.136471, lr: 0.01000

#> Loss at epoch 85: 0.226092, lr: 0.01000

#> Loss at epoch 86: 0.141407, lr: 0.01000

#> Loss at epoch 87: 0.116782, lr: 0.01000

#> Loss at epoch 88: 0.112259, lr: 0.01000

#> Loss at epoch 89: 0.114547, lr: 0.01000

#> Loss at epoch 90: 0.126242, lr: 0.01000

#> Loss at epoch 91: 0.120716, lr: 0.01000

#> Loss at epoch 92: 0.102394, lr: 0.01000

#> Loss at epoch 93: 0.180366, lr: 0.01000

#> Loss at epoch 94: 0.117197, lr: 0.01000

#> Loss at epoch 95: 0.113679, lr: 0.01000

#> Loss at epoch 96: 0.121416, lr: 0.01000

#> Loss at epoch 97: 0.196279, lr: 0.01000

#> Loss at epoch 98: 0.137671, lr: 0.01000

#> Loss at epoch 99: 0.118519, lr: 0.01000

#> Loss at epoch 100: 0.235122, lr: 0.01000

#> Summary of Deep Neural Network Model

#>

#>

#> ── Feature Importance

#>

#> Importance Std.Err Z value Pr(>|z|)

#> Sepal.Width → 0.886 0.388 2.29 0.022 *

#> Petal.Length → 20.581 9.271 2.22 0.026 *

#> Petal.Width → 1.038 1.369 0.76 0.448

#> Species → 0.239 0.190 1.26 0.208

#> ---

#> Signif. codes: 0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

#>

#>

#>

#> ── Average Conditional Effects

#> ACE Std.Err Z value Pr(>|z|)

#> Sepal.Width → 0.4800 0.0627 7.65 2e-14 ***

#> Petal.Length → 0.6290 0.0653 9.64 <2e-16 ***

#> Petal.Width → -0.2654 0.1537 -1.73 0.084 .

#> ---

#> Signif. codes: 0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

#>

#>

#>

#> ── Standard Deviation of Conditional Effects

#>

#> ACE Std.Err Z value Pr(>|z|)

#> Sepal.Width → 0.0768 0.0229 3.35 0.0008 ***

#> Petal.Length → 0.0425 0.0140 3.03 0.0024 **

#> Petal.Width → 0.0431 0.0188 2.30 0.0217 *

#> ---

#> Signif. codes: 0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

# }
